SAI #02: Feature Store, Splittable vs. Non-Splittable Files and more...

Splittable vs Non-Splittable Files, CDC (Change Data Capture), Machine Learning Pipeline, Feature Store.

Data Engineering Fundamentals + or What Every Data Engineer Should Know

👋 This is Aurimas. I write the weekly SAI Newsletter where my goal is to present complicated Data related concepts in a simple and easy to digest way. The goal is to help You UpSkill in Data Engineering, MLOps, Machine Learning and Data Science areas.

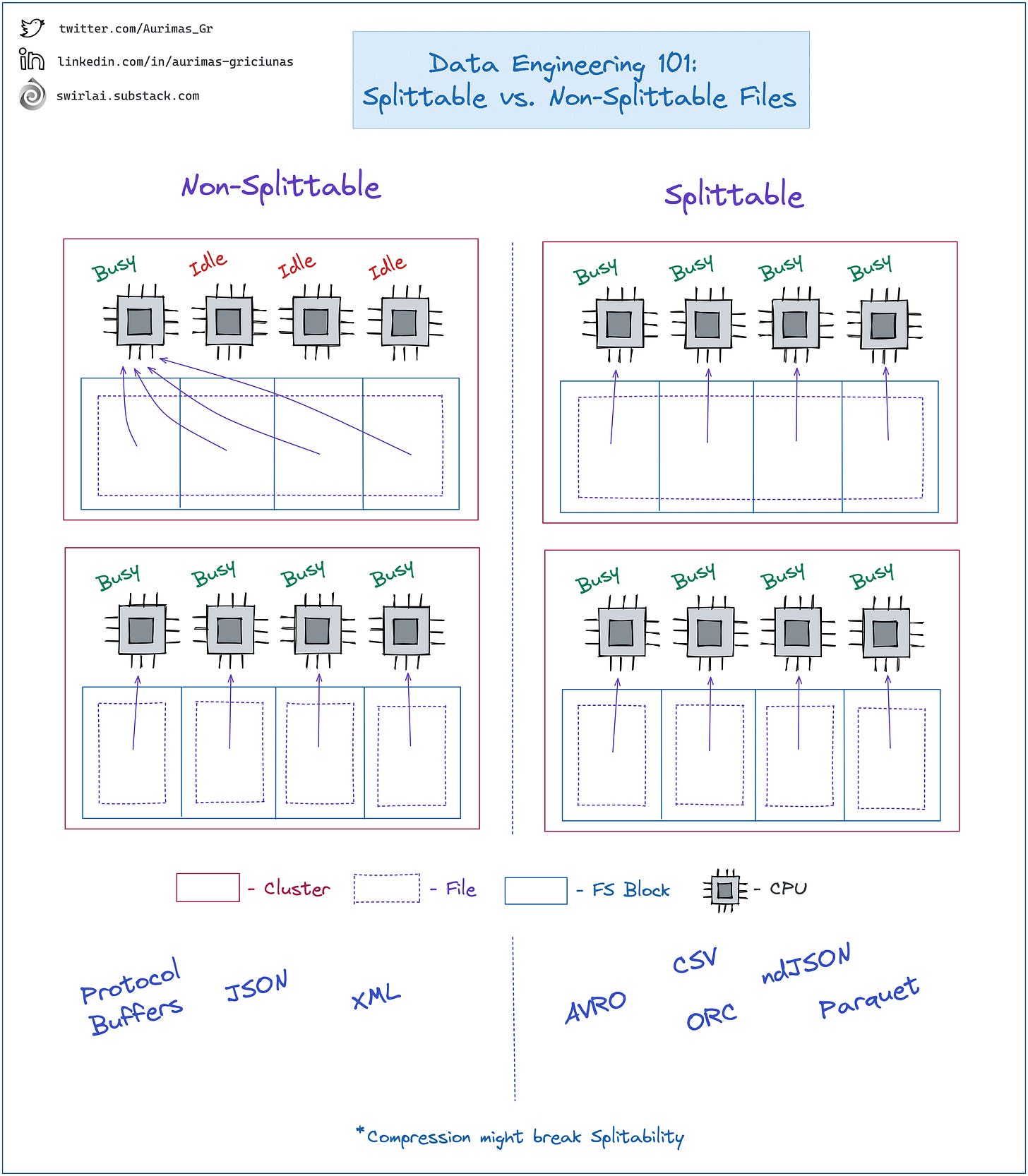

𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 𝘃𝘀. 𝗡𝗼𝗻-𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 𝗙𝗶𝗹𝗲𝘀.

You are very likely to run into a 𝗗𝗶𝘀𝘁𝗿𝗶𝗯𝘂𝘁𝗲𝗱 𝗖𝗼𝗺𝗽𝘂𝘁𝗲 𝗦𝘆𝘀𝘁𝗲𝗺 𝗼𝗿 𝗙𝗿𝗮𝗺𝗲𝘄𝗼𝗿𝗸 in your career. It could be 𝗦𝗽𝗮𝗿𝗸, 𝗛𝗶𝘃𝗲, 𝗣𝗿𝗲𝘀𝘁𝗼 or any other.

Also, it is very likely that these Frameworks would be reading data from a distributed storage. It could be 𝗛𝗗𝗙𝗦, 𝗦𝟯 etc.

These Frameworks utilize multiple 𝗖𝗣𝗨 𝗖𝗼𝗿𝗲𝘀 𝗳𝗼𝗿 𝗟𝗼𝗮𝗱𝗶𝗻𝗴 𝗗𝗮𝘁𝗮 and performing 𝗗𝗶𝘀𝘁𝗿𝗶𝗯𝘂𝘁𝗲𝗱 𝗖𝗼𝗺𝗽𝘂𝘁𝗲 in parallel.

How files are stored in your 𝗦𝘁𝗼𝗿𝗮𝗴𝗲 𝗦𝘆𝘀𝘁𝗲𝗺 𝗶𝘀 𝗞𝗲𝘆 for utilizing distributed 𝗥𝗲𝗮𝗱 𝗮𝗻𝗱 𝗖𝗼𝗺𝗽𝘂𝘁𝗲 𝗘𝗳𝗳𝗶𝗰𝗶𝗲𝗻𝘁𝗹𝘆.

𝗦𝗼𝗺𝗲 𝗱𝗲𝗳𝗶𝗻𝗶𝘁𝗶𝗼𝗻𝘀:

➡️ 𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 𝗙𝗶𝗹𝗲𝘀 are Files that can be partially read by several processes at the same time.

➡️ In distributed file or block storages files are stored in chunks called blocks.

➡️ Block sizes will vary between different storage systems.

𝗧𝗵𝗶𝗻𝗴𝘀 𝘁𝗼 𝗸𝗻𝗼𝘄:

➡️ If your file is 𝗡𝗼𝗻-𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 and is bigger than a block in storage - it will be split between blocks but will only be read by a 𝗦𝗶𝗻𝗴𝗹𝗲 𝗖𝗣𝗨 𝗖𝗼𝗿𝗲 which might cause 𝗜𝗱𝗹𝗲 𝗖𝗣𝗨 time.

➡️ If your file is 𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 - multiple cores can read it at the same time (one core per block).

𝗦𝗼𝗺𝗲 𝗴𝘂𝗶𝗱𝗮𝗻𝗰𝗲:

➡️ If possible - prefer 𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 𝗙𝗶𝗹𝗲 types.

➡️ If you are forced to use 𝗡𝗼𝗻-𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 files - manually partition them into sizes that would fit into a single FS Block to utilize more CPU Cores.

𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 𝗳𝗶𝗹𝗲 𝗳𝗼𝗿𝗺𝗮𝘁𝘀:

👉 𝗔𝘃𝗿𝗼.

👉 𝗖𝗦𝗩.

👉 𝗢𝗥𝗖.

👉 𝗻𝗱𝗝𝗦𝗢𝗡.

👉 𝗣𝗮𝗿𝗾𝘂𝗲𝘁.

𝗡𝗼𝗻-𝗦𝗽𝗹𝗶𝘁𝘁𝗮𝗯𝗹𝗲 𝗳𝗶𝗹𝗲 𝗳𝗼𝗿𝗺𝗮𝘁𝘀:

👉 𝗣𝗿𝗼𝘁𝗼𝗰𝗼𝗹 𝗕𝘂𝗳𝗳𝗲𝗿𝘀.

👉 𝗝𝗦𝗢𝗡.

👉 𝗫𝗠𝗟.

[𝗜𝗠𝗣𝗢𝗥𝗧𝗔𝗡𝗧] Compression might break splitability, more on it next time.

𝗖𝗗𝗖 (𝗖𝗵𝗮𝗻𝗴𝗲 𝗗𝗮𝘁𝗮 𝗖𝗮𝗽𝘁𝘂𝗿𝗲).

𝗖𝗵𝗮𝗻𝗴𝗲 𝗗𝗮𝘁𝗮 𝗖𝗮𝗽𝘁𝘂𝗿𝗲 is a software process used to replicate actions performed against 𝗢𝗽𝗲𝗿𝗮𝘁𝗶𝗼𝗻𝗮𝗹 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲𝘀 for use in downstream applications.

𝗧𝗵𝗲𝗿𝗲 𝗮𝗿𝗲 𝘀𝗲𝘃𝗲𝗿𝗮𝗹 𝘂𝘀𝗲 𝗰𝗮𝘀𝗲𝘀 𝗳𝗼𝗿 𝗖𝗗𝗖. 𝗧𝘄𝗼 𝗼𝗳 𝘁𝗵𝗲 𝗺𝗮𝗶𝗻 𝗼𝗻𝗲𝘀:

➡️ 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲 𝗥𝗲𝗽𝗹𝗶𝗰𝗮𝘁𝗶𝗼𝗻 (refer to 3️⃣ in the Diagram).

👉 𝗖𝗗𝗖 can be used for moving transactions performed against 𝗦𝗼𝘂𝗿𝗰𝗲 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲 to a 𝗧𝗮𝗿𝗴𝗲𝘁 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲. If each transaction is replicated - it is possible to retain all ACID guarantees when performing replication.

👉 𝗥𝗲𝗮𝗹 𝘁𝗶𝗺𝗲 𝗖𝗗𝗖 is extremely valuable here as it enables 𝗭𝗲𝗿𝗼 𝗗𝗼𝘄𝗻𝘁𝗶𝗺𝗲 𝗦𝗼𝘂𝗿𝗰𝗲 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲 𝗥𝗲𝗽𝗹𝗶𝗰𝗮𝘁𝗶𝗼𝗻 𝗮𝗻𝗱 𝗠𝗶𝗴𝗿𝗮𝘁𝗶𝗼𝗻. E.g It is extensively used when migrating 𝗼𝗻-𝗽𝗿𝗲𝗺 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲𝘀 serving 𝗖𝗿𝗶𝘁𝗶𝗰𝗮𝗹 𝗔𝗽𝗽𝗹𝗶𝗰𝗮𝘁𝗶𝗼𝗻𝘀 that can not be shut down for a moment to the cloud.

➡️ Facilitation of 𝗗𝗮𝘁𝗮 𝗠𝗼𝘃𝗲𝗺𝗲𝗻𝘁 𝗳𝗿𝗼𝗺 𝗢𝗽𝗲𝗿𝗮𝘁𝗶𝗼𝗻𝗮𝗹 𝗗𝗮𝘁𝗮𝗯𝗮𝘀𝗲𝘀 𝘁𝗼 𝗗𝗮𝘁𝗮 𝗟𝗮𝗸𝗲𝘀 (refer to 1️⃣ in the Diagram) 𝗼𝗿 𝗗𝗮𝘁𝗮 𝗪𝗮𝗿𝗲𝗵𝗼𝘂𝘀𝗲𝘀 (refer to 2️⃣ in the Diagram) 𝗳𝗼𝗿 𝗔𝗻𝗮𝗹𝘆𝘁𝗶𝗰𝘀 𝗽𝘂𝗿𝗽𝗼𝘀𝗲𝘀.

👉 There are currently two Data movement patterns widely applied in the industry: 𝗘𝗧𝗟 𝗮𝗻𝗱 𝗘𝗟𝗧.

👉 𝗜𝗻 𝘁𝗵𝗲 𝗰𝗮𝘀𝗲 𝗼𝗳 𝗘𝗧𝗟 - data extracted by CDC can be transformed on the fly and eventually pushed to the Data Lake or Data Warehouse.

👉 𝗜𝗻 𝘁𝗵𝗲 𝗰𝗮𝘀𝗲 𝗼𝗳 𝗘𝗟𝗧 - Data is replicated to the Data Lake or Data Warehouse as is and Transformations performed inside of the System.

𝗧𝗵𝗲𝗿𝗲 𝗶𝘀 𝗺𝗼𝗿𝗲 𝘁𝗵𝗮𝗻 𝗼𝗻𝗲 𝘄𝗮𝘆 𝗼𝗳 𝗵𝗼𝘄 𝗖𝗗𝗖 𝗰𝗮𝗻 𝗯𝗲 𝗶𝗺𝗽𝗹𝗲𝗺𝗲𝗻𝘁𝗲𝗱, 𝘁𝗵𝗲 𝗺𝗲𝘁𝗵𝗼𝗱𝘀 𝗮𝗿𝗲 𝗺𝗮𝗶𝗻𝗹𝘆 𝘀𝗽𝗹𝗶𝘁 𝗶𝗻𝘁𝗼 𝘁𝗵𝗿𝗲𝗲 𝗴𝗿𝗼𝘂𝗽𝘀:

➡️ 𝗣𝘂𝗹𝗹 𝗕𝗮𝘀𝗲𝗱 𝗖𝗗𝗖

👉 A client queries the Source Database and pushes data into the Target Database.

❗️Downside 1: There is a need to augment all of the source tables to include indicators that a record has changed.

❗️Downside 2: Usually - not a real time CDC, it might be performed hourly, daily etc.

❗️Downside 3: Source Database suffers high load when CDC is being performed.

❗️Downside 4: It is extremely challenging to replicate Delete events.

➡️ 𝗣𝘂𝘀𝗵 𝗕𝗮𝘀𝗲𝗱 𝗖𝗗𝗖

👉 Triggers are set up in the Source Database. Whenever a change event happens in the Database - it is pushed to a target system.

❗️ Downside 1: This approach usually causes highest database load overhead.

✅ Upside 1: Real Time CDC.

➡️ 𝗟𝗼𝗴 𝗕𝗮𝘀𝗲𝗱 𝗖𝗗𝗖

👉 Transactional Databases have all of the events performed against the Database logged in the transaction log for recovery purposes.

👉 A Transaction Miner is mounted on top of the logs and pushes selected events into a Downstream System. Popular implementation - Debezium.

❗️ Downside 1: More complicated to set up.

❗️ Downside 2: Not all Databases will have open source connectors.

✅ Upside 1: Least load on the Database.

✅ Upside 2: Real Time CDC.

MLOps Fundamentals or What Every Machine Learning Engineer Should Know

𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲.

𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲𝘀 are extremely important because they bring the automation aspect into the day to day work of 𝗗𝗮𝘁𝗮 𝗦𝗰𝗶𝗲𝗻𝘁𝗶𝘀𝘁𝘀 and 𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝘀. Pipelines should be reusable and robust. Working in Pipelines allows you to scale the number of Machine Learning models that can be maintained in production concurrently.

Usual Pipeline consists of the following steps:

1️⃣ 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗥𝗲𝘁𝗿𝗶𝗲𝘃𝗮𝗹

👉 Ideally we retrieve Features from a Feature Store here, if not - it could be a different kind of storage.

👉 Features should not require transformations at this stage, if they do - there should be an additional step of Feature Preparation.

👉 We do Train/Validation/Test splits here.

2️⃣ 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗩𝗮𝗹𝗶𝗱𝗮𝘁𝗶𝗼𝗻

👉 You would hope to have 𝗖𝘂𝗿𝗮𝘁𝗲𝗱 𝗗𝗮𝘁𝗮 at this point but errors can slip through. There could be a missing timeframe or 𝗗𝗮𝘁𝗮 could be coming late.

👉 You should perform 𝗣𝗿𝗼𝗳𝗶𝗹𝗲 𝗖𝗼𝗺𝗽𝗮𝗿𝗶𝘀𝗼𝗻 against data used during last time the pipeline was run. Any significant change in 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗗𝗶𝘀𝘁𝗿𝗶𝗯𝘂𝘁𝗶𝗼𝗻 could signal 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗗𝗿𝗶𝗳𝘁.

❗️You should act according to Validation results - if any of them breach predefined thresholds you should act accordingly, either alert responsible person or short circuit the Pipeline.

3️⃣ 𝗠𝗼𝗱𝗲𝗹 𝗧𝗿𝗮𝗶𝗻𝗶𝗻𝗴

👉 We train our ML Model using 𝗧𝗿𝗮𝗶𝗻𝗶𝗻𝗴 𝗗𝗮𝘁𝗮𝘀𝗲𝘁 from first step while validating on 𝗩𝗮𝗹𝗶𝗱𝗮𝘁𝗶𝗼𝗻 𝗗𝗮𝘁𝗮𝘀𝗲𝘁 for adequate results.

👉 If 𝗖𝗿𝗼𝘀𝘀-𝗩𝗮𝗹𝗶𝗱𝗮𝘁𝗶𝗼𝗻 is used - we do not split the Validation Set in the first step.

4️⃣ 𝗠𝗼𝗱𝗲𝗹 𝗩𝗮𝗹𝗶𝗱𝗮𝘁𝗶𝗼𝗻

👉 We calculate 𝗠𝗼𝗱𝗲𝗹 𝗣𝗲𝗿𝗳𝗼𝗿𝗺𝗮𝗻𝗰𝗲 𝗠𝗲𝘁𝗿𝗶𝗰𝘀 against the 𝗧𝗲𝘀𝘁 𝗗𝗮𝘁𝗮𝘀𝗲𝘁 that we split in the first step.

❗️You should act according to Validation results - if predefined thresholds are breached you should act accordingly, either alert responsible person or short circuit the Pipeline.

5️⃣ 𝗠𝗼𝗱𝗲𝗹 𝗦𝗲𝗿𝘃𝗶𝗻𝗴

👉 You serve the Model for Deployment by placing it into a Model Registry.

𝗦𝗼𝗺𝗲 𝗴𝗲𝗻𝗲𝗿𝗮𝗹 𝗿𝗲𝗾𝘂𝗶𝗿𝗲𝗺𝗲𝗻𝘁𝘀:

➡️ You should be able to trigger the Pipeline for retraining purposes. It could be done by the Orchestrator on a schedule, from the Experimentation Environment or by an Alerting System if any faults in Production are detected.

➡️ Pipeline steps should be glued together by Experiment Tracking System for Pipeline Run Reproducibility purposes.

𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗦𝘁𝗼𝗿𝗲.

𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗦𝘁𝗼𝗿𝗲 𝗦𝘆𝘀𝘁𝗲𝗺𝘀 are an extremely important concept as they sit between 𝗗𝗮𝘁𝗮 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴 𝗮𝗻𝗱 𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲𝘀.

𝗧𝗵𝗲 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗦𝘁𝗼𝗿𝗲 𝗦𝘆𝘀𝘁𝗲𝗺 𝘀𝗼𝗹𝘃𝗲𝘀 𝗳𝗼𝗹𝗹𝗼𝘄𝗶𝗻𝗴 𝗶𝘀𝘀𝘂𝗲𝘀:

➡️ Eliminates Training/Serving skew by syncing Batch and Online Serving Storages (5️⃣)

➡️ Enables Feature Sharing and Discoverability through the Metadata Layer - you define the Feature Transformations once, enable discoverability through the Feature Catalog and then serve Feature Sets for training and inference purposes trough unified interface (4️⃣, 3️⃣).

𝗧𝗵𝗲 𝗶𝗱𝗲𝗮𝗹 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗦𝘁𝗼𝗿𝗲 𝗦𝘆𝘀𝘁𝗲𝗺 𝘀𝗵𝗼𝘂𝗹𝗱 𝗵𝗮𝘃𝗲 𝘁𝗵𝗲𝘀𝗲 𝗽𝗿𝗼𝗽𝗲𝗿𝘁𝗶𝗲𝘀:

1️⃣ 𝗜𝘁 𝘀𝗵𝗼𝘂𝗹𝗱 𝗯𝗲 𝗺𝗼𝘂𝗻𝘁𝗲𝗱 𝗼𝗻 𝘁𝗼𝗽 𝗼𝗳 𝘁𝗵𝗲 𝗖𝘂𝗿𝗮𝘁𝗲𝗱 𝗗𝗮𝘁𝗮 𝗟𝗮𝘆𝗲𝗿

👉 the Data that is being pushed into the Feature Store System should be of High Quality and meet SLAs, trying to Curate Data inside of the Feature Store System is a recipe for disaster.

👉 Curated Data could be coming in Real Time or Batch. Not all companies need Real Time Data at least when they are only starting with Machine Learning.

2️⃣ 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗦𝘁𝗼𝗿𝗲 𝗦𝘆𝘀𝘁𝗲𝗺𝘀 𝘀𝗵𝗼𝘂𝗹𝗱 𝗵𝗮𝘃𝗲 𝗮 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗧𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗮𝘁𝗶𝗼𝗻 𝗟𝗮𝘆𝗲𝗿 𝘄𝗶𝘁𝗵 𝗶𝘁𝘀 𝗼𝘄𝗻 𝗰𝗼𝗺𝗽𝘂𝘁𝗲.

👉 In the modern Data Stack this part could be provided by the vendor or you might need to implement it yourself.

👉 The industry is moving towards a state where it becomes normal for vendors to include Feature Transformation part into their offering.

3️⃣ 𝗥𝗲𝗮𝗹 𝗧𝗶𝗺𝗲 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗦𝗲𝗿𝘃𝗶𝗻𝗴 𝗔𝗣𝗜 - this is where you will be retrieving your Features for low latency inference. The System should provide two types of Real Time serving APIs:

👉 Get - you fetch a single Feature Vector.

👉 Batch Get - you fetch multiple Feature Vectors at the same time with Low Latency.

4️⃣ 𝗕𝗮𝘁𝗰𝗵 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗦𝗲𝗿𝘃𝗶𝗻𝗴 𝗔𝗣𝗜 - this is where you will be fetching your Features for Batch inference and Model Training. The API should provide:

👉 Point in time Feature Retrieval - you need to be able to time travel. A Feature view fetched for a certain timestamp should always return its state at that point in time.

👉 Point in time Joins - you should be able to combine several feature sets in a specific point in time easily.

5️⃣ 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗦𝘆𝗻𝗰 - whether the Data was ingested in Real Time or Batch, the Data being Served should always be synced. Implementation of this part can vary, an example could be:

👉 Data is ingested in Real Time -> Feature Transformation Applied -> Data pushed to Low Latency Read capable Storage like Redis -> Data is Change Data Captured to Cold Storage like S3.

👉 Data is ingested in Batch -> Feature Transformation Applied -> Data is pushed to Cold Storage like S3 -> Data is made available for Real Time Serving by syncing it with Low Latency Read capable Storage like Redis.

So good, so clear... Good job 👍🙏

Hello Aurimas,

Thanks for this reading, you explained cross-cutting topics really useful 🤓