SAI #11: 5 Books for a Data Engineer of 2023, Central Role of The Model Registry and more...

5 Books for a Data Engineer of 2023, Central Role of The Model Registry, ACID Properties in DBMS.

👋 This is Aurimas. I write the weekly SAI Newsletter where my goal is to present complicated Data-related concepts in a simple and easy-to-digest way. The goal is to help You UpSkill in Data Engineering, MLOps, Machine Learning and Data Science areas.

In this episode we cover:

5 Books for a Data Engineer of 2023.

Central Role of The Model Registry.

ACID Properties in DBMS.

5 Books for a Data Engineer of 2023

If I could only choose 5 books to read in 2023 as an aspiring Data Engineer, these would be the ones, in this order:

1️⃣ “Fundamentals of Data Engineering” - A book that I wish I had 5 years ago. After reading it you will understand the entire Data Engineering workflow. It will prepare you for further deep dives.

2️⃣ “Accelerate” - Data Engineers should follow the same practices that Software Engineers do and more. After reading this book you will understand DevOps practices inside out.

3️⃣ “Designing Data-Intensive Applications” - Delve deeper into Data Engineering Fundamentals. After reading the book you will understand Storage Formats, Distributed Technologies, Distributed Consensus algorithms and more.

4️⃣ “Team Topologies” - Sometimes you might get confused about why a certain communication pattern is in place in the company you work for. After reading this book you will learn the Team Topologies model of organizational structure for fast flow. It will help you navigate the organizational dynamics and understand your role in the value chain.

5️⃣ “Data Mesh” - Data Mesh has become an extremely popular buzzword in recent years. After reading this book you will understand what the author, who coined the term herself, actually intended by it. Don’t be the one to throw around the term without understanding its meaning deeply.

[NOTE]: All of the books above cover Fundamental concepts. Even if you read all of them and decide that Data Engineering is not for you, you will be able to reuse the knowledge in any other Tech Role.

[ADDITIONAL NOTE]: I did not include any Fundamental Classics in the list as these can be picked up after you have already established yourself in the role.

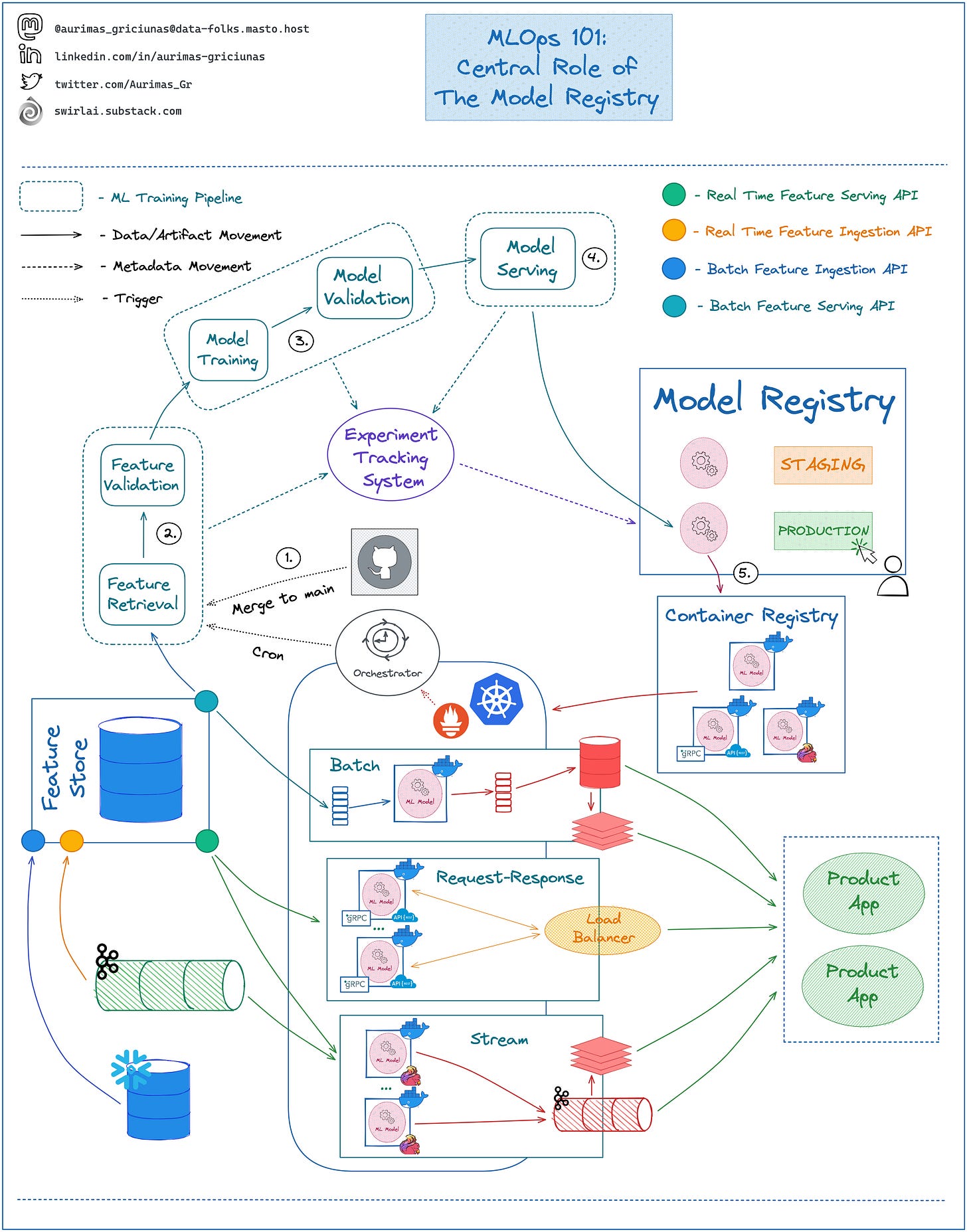

Central Role of The Model Registry

Why is the Model Registry such an important element of the MLOps Stack?

We have already looked into the procedure for different types of ML Model deployments.

Let’s review the model training steps:

𝟭: Version Control: The Machine Learning Training Pipeline is defined in code. Once merged to the main branch, it is built and triggered.

𝟮: Feature Preprocessing: Features are retrieved from the Feature Store, validated and passed to the next stage. Any feature related metadata that is tightly coupled to the Model being trained is saved to the Experiment Tracking System.

𝟯: Model Training: The Model is trained and validated on Preprocessed Data. Model-related metadata is saved to the Experiment Tracking System.

𝟰: If Model Validation passes all checks, the Model Artifact is passed to the Model Registry and stored there.

✅ This is it - regardless of what deployment type will follow, the trained model ends up in the Model Registry. The Model Registry is what glues Training and Deployment Pipelines together, and this is where the handover of the Model Artifact happens.

𝟱: The same model can be packaged as a container for different deployment types by implementing the respective interface, e.g.:

👉 Flink application for Stream Processing Deployment.

👉 gRPC API for Request-Response.

👉 Plain model pointing to the Batch Serving Feature Store API for Batch.

✅ This is good news - as long as you are training your models using historical data, the training pipeline is the same for any type of deployment.

❗️ It’s a different story when it comes to online training, but more on that in future episodes, so stay tuned!
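The handover described in the steps above can be sketched in a few lines of Python. This is only a minimal illustration, not a real registry: `InMemoryModelRegistry`, `train_and_register`, `BatchDeployment`, `RequestResponseDeployment`, the `accuracy` metric and the `0.8` threshold are all hypothetical names and values, and a plain Python function stands in for the Model Artifact.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass(frozen=True)
class ModelVersion:
    name: str
    version: int
    artifact: Callable[[float], float]  # stand-in for a serialized model
    metrics: Dict[str, float]


class InMemoryModelRegistry:
    """Hypothetical registry: the handover point between pipelines."""

    def __init__(self) -> None:
        self._versions: Dict[str, List[ModelVersion]] = {}

    def register(self, name: str, artifact: Callable[[float], float],
                 metrics: Dict[str, float]) -> ModelVersion:
        versions = self._versions.setdefault(name, [])
        model_version = ModelVersion(name, len(versions) + 1, artifact, metrics)
        versions.append(model_version)
        return model_version

    def latest(self, name: str) -> ModelVersion:
        return self._versions[name][-1]


def train_and_register(registry: InMemoryModelRegistry, name: str,
                       accuracy: float,
                       min_accuracy: float = 0.8) -> Optional[ModelVersion]:
    """Training pipeline: only validated models reach the registry."""
    artifact = lambda x: 2.0 * x  # pretend this is the trained model
    if accuracy < min_accuracy:   # validation gate (step 4)
        return None
    return registry.register(name, artifact, {"accuracy": accuracy})


class BatchDeployment:
    """Wraps a registered model for batch scoring."""

    def __init__(self, model: ModelVersion) -> None:
        self.model = model

    def score(self, rows: List[float]) -> List[float]:
        return [self.model.artifact(x) for x in rows]


class RequestResponseDeployment:
    """Wraps the same registered model behind a request handler."""

    def __init__(self, model: ModelVersion) -> None:
        self.model = model

    def handle(self, request: Dict[str, float]) -> Dict[str, float]:
        return {
            "model_version": self.model.version,
            "prediction": self.model.artifact(request["feature"]),
        }
```

Both deployment wrappers read the same `ModelVersion` from the registry, so the training side does not need to know which deployment type will consume the model.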

You can find a more detailed explanation of different Model Deployment procedures in one of my previous posts.

[IMPORTANT]: The Defined Flow assumes that your Pipelines are already Tested and ready to be released to Production. We’ll look into the pre-production flow in a future episode.

This is The Way.

ACID Properties in DBMS

Most likely you are taking ACID Properties for granted when you are using transactional databases.

If you are interviewing for Data Engineering roles you will be asked to explain what they mean (unfortunately, I’ve seen Senior Level engineers who don’t know or understand the concept).

Let’s take a closer look.

A transaction is a sequence of steps performed on a database as a single logical unit of work.

The ACID database transaction model ensures that transactions leave the database in a valid state by guaranteeing:

➡️ Atomicity - Each transaction is either carried out in full or the database reverts to the state it was in before the transaction started.

➡️ Consistency - The database must be in a consistent state before and after the transaction.

➡️ Isolation - Multiple transactions can run concurrently without interfering with each other.

➡️ Durability - Successful transactions are persisted even in the case of system failure.
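Atomicity and Consistency are easy to see in action. Below is a minimal sketch using Python's built-in `sqlite3` module with an in-memory database; the `accounts` table, the `CHECK (balance >= 0)` rule and the helper names `setup`, `transfer` and `balances` are assumptions made up for this illustration. It does not demonstrate Isolation or Durability.

```python
import sqlite3
from typing import Dict


def setup() -> sqlite3.Connection:
    """Create an in-memory database with two example accounts."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE accounts ("
        " name TEXT PRIMARY KEY,"
        " balance INTEGER NOT NULL CHECK (balance >= 0))"
    )
    conn.executemany(
        "INSERT INTO accounts VALUES (?, ?)",
        [("alice", 100), ("bob", 50)],
    )
    conn.commit()
    return conn


def transfer(conn: sqlite3.Connection, src: str, dst: str, amount: int) -> bool:
    """Move money as one transaction; return False if it was rolled back."""
    try:
        # `with conn` commits if the block succeeds and rolls back on error.
        with conn:
            conn.execute(
                "UPDATE accounts SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
            # Violates the CHECK constraint if src would go negative.
            conn.execute(
                "UPDATE accounts SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False


def balances(conn: sqlite3.Connection) -> Dict[str, int]:
    return dict(conn.execute("SELECT name, balance FROM accounts ORDER BY name"))


if __name__ == "__main__":
    conn = setup()
    print(transfer(conn, "alice", "bob", 30), balances(conn))
    print(transfer(conn, "alice", "bob", 500), balances(conn))
```

In the failing transfer the credit to `bob` is executed first, yet it does not survive: the whole transaction is rolled back once the debit breaks the constraint, so the balances stay exactly as they were before the transfer started.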

ACID guarantees are provided by most Relational DBMSes:

👉 MySQL

👉 PostgreSQL

👉 Microsoft SQL Server

👉 …

NoSQL databases usually do not conform to them - they typically follow another transaction model called BASE (Basically Available, Soft state, Eventually consistent). BASE guarantees eventual consistency. Example databases:

👉 Cassandra

👉 MongoDB

👉 …
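To make "eventual consistency" concrete, here is a toy simulation in Python. It is not how Cassandra or MongoDB work internally; `EventuallyConsistentStore`, `Replica` and the explicit `replicate()` step are hypothetical and only illustrate that a replica can return stale data until replication catches up.

```python
from collections import deque
from typing import Any, Deque, Dict, List, Optional, Tuple


class Replica:
    """A single copy of the data."""

    def __init__(self) -> None:
        self.data: Dict[str, Any] = {}

    def read(self, key: str) -> Optional[Any]:
        return self.data.get(key)


class EventuallyConsistentStore:
    """Writes land on the primary first and reach replicas later."""

    def __init__(self, n_replicas: int = 2) -> None:
        self.primary = Replica()
        self.replicas: List[Replica] = [Replica() for _ in range(n_replicas)]
        self._pending: Deque[Tuple[str, Any]] = deque()

    def write(self, key: str, value: Any) -> None:
        # The write is acknowledged before replicas have seen it.
        self.primary.data[key] = value
        self._pending.append((key, value))

    def replicate(self) -> None:
        # Stand-in for asynchronous replication catching up.
        while self._pending:
            key, value = self._pending.popleft()
            for replica in self.replicas:
                replica.data[key] = value


if __name__ == "__main__":
    store = EventuallyConsistentStore()
    store.write("user:1", "Aurimas")
    print(store.replicas[0].read("user:1"))  # stale read before replication
    store.replicate()
    print(store.replicas[0].read("user:1"))  # replicas have converged
```

Between `write` and `replicate` a reader hitting a replica sees old data, which an ACID transaction on a single database would not allow after commit.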

It is easy to see how, without ACID properties, the data in a DBMS could become inconsistent.
