SAI #20: Decomposing Real Time Machine Learning Service Latency.

Decomposing Real Time Machine Learning Service Latency, Stream Processing: Event vs. Processing Time.

👋 This is Aurimas. I write the weekly SAI Newsletter where my goal is to present complicated Data-related concepts in a simple and easy-to-digest way. The goal is to help you upskill in Data Engineering, MLOps, Machine Learning and Data Science areas.

This week in the Newsletter:

Decomposing Real Time Machine Learning Service Latency.

Stream Processing: Event vs. Processing Time.

Decomposing Real Time Machine Learning Service Latency.

How do we decompose Real Time Machine Learning Service latency, and why should you, as an ML Engineer, care about understanding the pieces?

Usually, what the users of your Machine Learning Service care about is the total endpoint latency: the time between when a request is sent to the Service (1.) and when the response is received (6.).
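As a minimal sketch of measuring this from the caller's side, the loop below times repeated requests and reports latency percentiles. SLAs are often expressed as a percentile such as p95 or p99 rather than an average, because a handful of slow requests can hide behind a good mean. `call_endpoint` is a hypothetical stand-in (it only sleeps for a few random milliseconds) for a real HTTP or gRPC call to your service.

```python
import random
import statistics
import time

def call_endpoint(payload):
    """Hypothetical client call to the ML Service; replace with a real request."""
    time.sleep(random.uniform(0.001, 0.01))  # simulated network + service time
    return {"score": 0.42}

latencies_ms = []
for _ in range(100):
    start = time.perf_counter()             # (1.) request sent
    call_endpoint({"user_id": 42})
    elapsed = time.perf_counter() - start   # (6.) response received
    latencies_ms.append(elapsed * 1000)

# quantiles(n=100) returns 99 cut points: index 49 is p50, 94 is p95, 98 is p99.
cuts = statistics.quantiles(latencies_ms, n=100)
p50, p95, p99 = cuts[49], cuts[94], cuts[98]
print(f"p50={p50:.1f} ms  p95={p95:.1f} ms  p99={p99:.1f} ms")
```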

SLAs will be established that define the acceptable latency, and you will need to meet them. To do this efficiently, you need to know the building blocks that make up the total latency. Being able to decompose the total latency matters even more because it lets you measure and improve each piece independently. Let's see how.
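One way to get this decomposition is to time each stage inside the request handler. The sketch below assumes a synchronous Python service; `lookup_features`, `preprocess` and `predict` are hypothetical placeholders that only sleep, standing in for the Feature Lookup, Feature Preprocessing and Inference steps described below. Network time between the client and the service is not visible from inside the handler and still has to be measured from the caller's side.

```python
import time
from contextlib import contextmanager

# Hypothetical stand-ins for the real stages; replace with your own calls.
def lookup_features(user_id):
    time.sleep(0.02)  # simulate an Online Feature Store round trip
    return {"clicks_7d": 12, "avg_basket": 31.5}

def preprocess(features):
    time.sleep(0.005)  # simulate feature transformations
    return [features["clicks_7d"] / 100, features["avg_basket"] / 100]

def predict(vector):
    time.sleep(0.01)  # simulate model inference
    return sum(vector)

@contextmanager
def timed(stage, timings):
    """Record the wall-clock duration of the enclosed block under `stage`, in ms."""
    start = time.perf_counter()
    try:
        yield
    finally:
        timings[stage] = (time.perf_counter() - start) * 1000

def handle_request(user_id):
    timings = {}
    with timed("total", timings):
        with timed("feature_lookup", timings):
            features = lookup_features(user_id)
        with timed("preprocessing", timings):
            vector = preprocess(features)
        with timed("inference", timings):
            score = predict(vector)
    return score, timings

score, timings = handle_request(user_id=42)
for stage, ms in timings.items():
    print(f"{stage:>15}: {ms:6.1f} ms")
```

In a real service you would typically ship these per-stage timings to your metrics system and compare their percentiles across many requests, rather than reading a single request's numbers.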

The total latency of a typical Machine Learning Service is made up of:

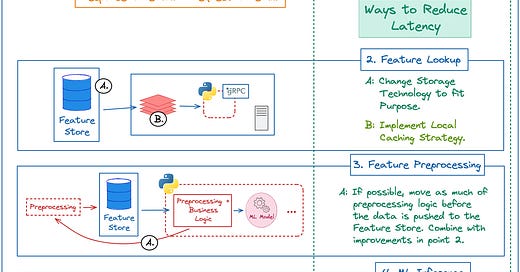

2: Feature Lookup.

You can decrease the latency here by:

A: Changing the Storage Technology to better fit the type of data you are looking up and the queries you are running against the Store.

B: Implementing aggressive caching strategies that keep the data of the most frequently queried entities locally. You could even spin up local Online Feature Stores if the data held in them is not too big (however, managing and syncing these becomes troublesome).
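A minimal sketch of such a cache, assuming an in-process Python service: an LRU cache that evicts the least recently used entity once it is full, plus a time-to-live (TTL) so that cached features do not drift too far from the Online Feature Store. `fetch_from_feature_store`, the capacity of 2 and the 30 second TTL are hypothetical values chosen for illustration.

```python
import time
from collections import OrderedDict

class TTLLRUCache:
    """In-process LRU cache whose entries also expire after `ttl_s` seconds."""

    def __init__(self, max_entries=10_000, ttl_s=60.0):
        self.max_entries = max_entries
        self.ttl_s = ttl_s
        self._data = OrderedDict()  # entity_id -> (inserted_at, features)

    def get(self, key):
        item = self._data.get(key)
        if item is None:
            return None
        inserted_at, value = item
        if time.monotonic() - inserted_at > self.ttl_s:
            del self._data[key]  # stale entry: force a fresh lookup
            return None
        self._data.move_to_end(key)  # mark as most recently used
        return value

    def put(self, key, value):
        self._data[key] = (time.monotonic(), value)
        self._data.move_to_end(key)
        if len(self._data) > self.max_entries:
            self._data.popitem(last=False)  # evict the least recently used entry

store_calls = 0

def fetch_from_feature_store(entity_id):
    """Hypothetical remote Online Feature Store lookup (counts its calls)."""
    global store_calls
    store_calls += 1
    return {"entity_id": entity_id, "clicks_7d": 12}

cache = TTLLRUCache(max_entries=2, ttl_s=30.0)

def get_features(entity_id):
    features = cache.get(entity_id)
    if features is None:  # cache miss: go to the Feature Store
        features = fetch_from_feature_store(entity_id)
        cache.put(entity_id, features)
    return features

for entity in ["a", "b", "a", "a", "c", "b"]:
    get_features(entity)
print(store_calls)  # 4
```

The TTL is the knob that trades freshness for latency: a longer TTL means more cache hits but older features. For a single process, `functools.lru_cache` covers the LRU part but has no expiry, which is why the sketch implements its own.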

3: Feature Preprocessing.

Decrease latency here by:

A: Where possible, moving as much of the preprocessing logic as you can upstream, so that it runs before the data is pushed to the Feature Store. The calculation is then performed once instead of each time before Inference. Combine this with the improvements from point 2 for the largest latency gain.
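To illustrate the difference, the sketch below produces the same two features (average purchase amount and purchase count, both hypothetical) either on every request or once at write time. A plain `dict` stands in for the Online Feature Store; this is an assumption for illustration, not a real Feature Store API.

```python
from statistics import mean

# Hypothetical raw data: per-user purchase amounts from an upstream pipeline.
raw_purchases = {
    "user_1": [20.0, 35.0, 50.0],
    "user_2": [5.0, 15.0],
}

# Option 1: store raw data and compute features on every inference request.
raw_store = dict(raw_purchases)

def features_at_request_time(user_id):
    purchases = raw_store[user_id]
    return {"avg_purchase": mean(purchases), "n_purchases": len(purchases)}

# Option 2: compute features once, when data is pushed to the (dict-based,
# hypothetical) Online Feature Store.
feature_store = {}

def push_to_feature_store(user_id, purchases):
    feature_store[user_id] = {
        "avg_purchase": mean(purchases),
        "n_purchases": len(purchases),
    }

for user_id, purchases in raw_purchases.items():
    push_to_feature_store(user_id, purchases)

def features_precomputed(user_id):
    # The request path is now a single key lookup with no arithmetic.
    return feature_store[user_id]

print(features_precomputed("user_1"))  # {'avg_purchase': 35.0, 'n_purchases': 3}
```

This only works for features that do not depend on information available solely at request time; those still have to be computed on the request path.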

4: Machine Learning Inference.

Decrease latency here by:

A: Reducing the Model Size using techniques like Pruning, Knowledge Distillation or Quantisation (more on these in future posts).

B: If your service consists of multiple models, applying inference in parallel and combining the results instead of chaining them in sequence, where the models do not depend on each other's outputs.
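A sketch of the difference, assuming the two models are independent and are called over the network (or otherwise do I/O-bound work), so `asyncio` can overlap them. `fraud_model` and `churn_model` are hypothetical, and `asyncio.sleep` only simulates their latency. Chaining the calls costs roughly the sum of both latencies; running them concurrently costs roughly the slower one.

```python
import asyncio
import time

# Two hypothetical, independent models; asyncio.sleep stands in for the
# time each inference call (e.g. a request to a model server) takes.
async def fraud_model(features):
    await asyncio.sleep(0.10)
    return 0.2

async def churn_model(features):
    await asyncio.sleep(0.15)
    return 0.6

async def sequential(features):
    a = await fraud_model(features)
    b = await churn_model(features)
    return a, b

async def parallel(features):
    # Both calls are in flight at the same time; total is roughly the slower one.
    results = await asyncio.gather(fraud_model(features), churn_model(features))
    return tuple(results)

def timed_run(coro_fn, features):
    start = time.perf_counter()
    result = asyncio.run(coro_fn(features))
    return result, time.perf_counter() - start

features = {"clicks_7d": 12}
seq_result, seq_s = timed_run(sequential, features)
par_result, par_s = timed_run(parallel, features)
print(f"sequential: {seq_s:.2f}s, parallel: {par_s:.2f}s")
```

If the models are CPU-bound and run in the same Python process, `asyncio` alone will not overlap them; you would need separate processes or separate model servers to get real parallelism.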

These three building blocks are specific to an ML System. Naturally, there are also components like Network Latency and Machine Resources that we need to take into consideration.

Network Latency could be reduced by pulling the ML Model into the backend service, which removes the network hop between the backend and a separate model service.

When it comes to Machine Resources, it is important to consider horizontal scaling strategies and how large your servers need to be to efficiently host the type of model you are using.
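For a first estimate of how many replicas horizontal scaling requires, one common back-of-the-envelope approach (not necessarily the one the author uses) is Little's law: the average number of requests in flight equals the arrival rate times the average latency. The traffic numbers, the per-replica concurrency and the 30% headroom below are all hypothetical assumptions.

```python
import math

def replicas_needed(peak_rps, mean_latency_s, concurrency_per_replica, headroom=0.3):
    """Rough replica count via Little's law: in-flight requests = arrival rate * latency.

    `concurrency_per_replica` is how many requests one replica can serve at once
    while still meeting the latency target (an assumed value; measure it with a
    load test). `headroom` keeps spare capacity for spikes and failover.
    """
    in_flight = peak_rps * mean_latency_s
    usable_per_replica = concurrency_per_replica * (1 - headroom)
    return math.ceil(in_flight / usable_per_replica)

# Hypothetical numbers: 400 requests/s at peak, 50 ms per request,
# 4 concurrent requests per replica -> 20 in flight / 2.8 usable per replica.
print(replicas_needed(peak_rps=400, mean_latency_s=0.05, concurrency_per_replica=4))  # 8
```

Treat the result as a starting point for load testing rather than a final capacity plan.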

Stream Processing: Event vs. Processing Time.

Today we start off the Stream Processing topic by looking at the two main time domains you have to consider when processing unbounded streams of data, and the problems associated with them.

An event in this context is a piece of information that was generated by a specific action and delivered to the Data System.

1: Event Time - the time when the event actually occurred. E.g. when a click happened on the website.

2: Processing Time - the time when the event was observed in the Streaming Data System. E.g. when the event was read from a Kafka Topic by a Flink Application.
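To see why the distinction matters, consider a click that happens at the very end of a minute but is read by the stream processor a few seconds later. If events are grouped into one-minute windows, the click lands in a different window depending on which time domain is used. The `Event` class and the timestamps are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime

@dataclass
class Event:
    name: str
    event_time: datetime       # when the action happened (e.g. the click)
    processing_time: datetime  # when the stream processor observed it

def minute_window(ts: datetime) -> datetime:
    """Truncate a timestamp to the start of its one-minute window."""
    return ts.replace(second=0, microsecond=0)

click = Event(
    name="click",
    event_time=datetime(2023, 1, 15, 12, 0, 59),
    processing_time=datetime(2023, 1, 15, 12, 1, 2),  # observed 3 s later
)

print(minute_window(click.event_time))       # 2023-01-15 12:00:00
print(minute_window(click.processing_time))  # 2023-01-15 12:01:00
```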

Event Lag

Ideally, we would process events as soon as they happen. The unfortunate truth is that there is always a time lag between when an event occurs and when it is processed, for various reasons, e.g. network congestion or throughput limitations.

The reality can be represented by the following intuitive graph:

We place Event Time and Processing Time on the X and Y axes respectively.

The black dashed line represents the ideal scenario: Event Time = Processing Time.

The purple line represents reality: the distance between the ideal line and the purple line is the Processing-Event Time Lag, which is always positive.
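The lag in the graph can be computed per event as Processing Time minus Event Time. A minimal sketch over hypothetical timestamps: with synchronised clocks every lag is non-negative, although in practice clock skew between the producer and the processing machines can make a measured lag slightly negative.

```python
from datetime import datetime, timedelta

# Hypothetical (event_time, processing_time) pairs observed by a consumer.
base = datetime(2023, 1, 15, 12, 0, 0)
observed = [
    (base + timedelta(seconds=0), base + timedelta(seconds=2)),
    (base + timedelta(seconds=5), base + timedelta(seconds=6)),
    (base + timedelta(seconds=9), base + timedelta(seconds=21)),  # e.g. a network hiccup
]

lags = [(processing - event).total_seconds() for event, processing in observed]

# Assumes producer and processor clocks are synchronised; otherwise skew
# could push a measured lag below 0.
assert all(lag >= 0 for lag in lags)
print(lags)                               # [2.0, 1.0, 12.0]
print(max(lags), sum(lags) / len(lags))   # 12.0 5.0
```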

Event Order

There are additional complexities connected to these two Time Domains when processing unbounded data streams.

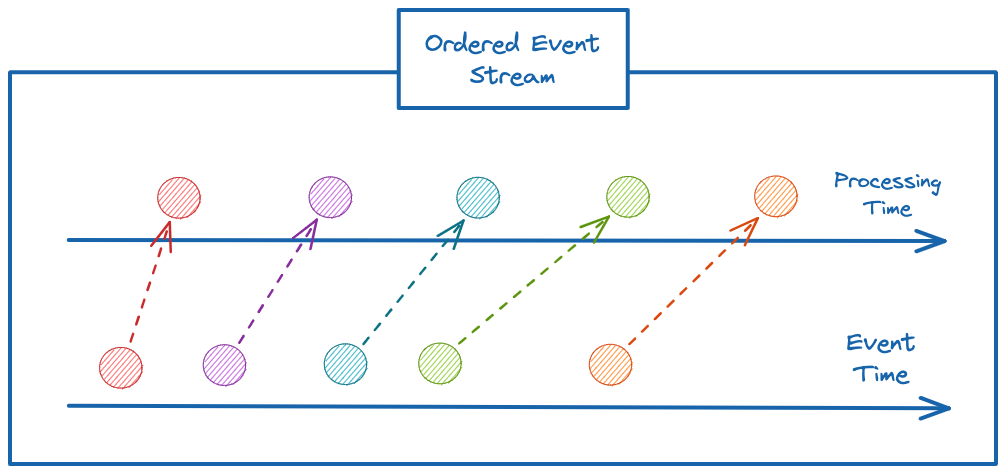

One of them concerns the ordering of the events ingested into the Streaming System. As in the previous case, even if there is a lag between Processing and Event Time, the ideal case would be for the Events to reach the Data System in the order they happened, as displayed in the following graph:

The unfortunate truth, once again, is that events will reach your Streaming Data System out of order, as displayed in the following graph:

We need to be able to deal with this out-of-order arrival, and we will look into how Stream Processing Systems do that in future Episodes.
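Handling disorder is left for future Episodes, but measuring it is straightforward. The sketch below assumes event times are listed in arrival (Processing Time) order and counts how many events arrive after an event that happened later than them. The arrival sequence is hypothetical.

```python
from datetime import datetime

def count_out_of_order(event_times):
    """Count events whose Event Time is earlier than one already seen.

    `event_times` must be given in arrival (Processing Time) order.
    """
    max_seen = None
    late = 0
    for ts in event_times:
        if max_seen is not None and ts < max_seen:
            late += 1  # arrived after an event that happened later
        else:
            max_seen = ts
    return late

# Hypothetical arrival sequence: the 12:00:03 and 12:00:01 events arrive late.
arrivals = [
    datetime(2023, 1, 15, 12, 0, 0),
    datetime(2023, 1, 15, 12, 0, 4),
    datetime(2023, 1, 15, 12, 0, 3),
    datetime(2023, 1, 15, 12, 0, 5),
    datetime(2023, 1, 15, 12, 0, 1),
]
print(count_out_of_order(arrivals))  # 2
```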

A few weeks ago I had the chance to chat with Thomas Bustos about MLOps and Data in general on his podcast!

I had an amazing time discussing both technical and career progression related topics.

Definitely check it out 😊

Join SwirlAI Data Talent Collective

If you are looking to fill your Hiring Pipeline with Data Talent, or you are looking for a new job opportunity in the Data Space, check out SwirlAI Data Talent Collective! Find out how it works by following the link below.