SAI #25: Twitter's Recommender System (The Algorithm)

Review of recently open-sourced Twitter's recommender system.

👋 This is Aurimas. I write the weekly SAI Newsletter with the goal of presenting complicated Data related concepts in a simple and easy-to-digest way. The goal is to help You UpSkill in Data Engineering, MLOps, Machine Learning and Data Science areas.

Recently, Twitter has open-sourced its Recommendation Algorithm. I have previously covered what a general Recommender System Design looks like. With Twitter’s algorithm secrets now available, it is the perfect time to see how this real-life example fits on the general map.

General Recommender System Design

The goal of a Recommender System is to return a list of recommended items given a certain context. It could be a search query on an e-commerce website or a list of Tweets on your Twitter timeline.

The procedure in real-world setups usually consists of two steps:

Candidate Retrieval.

Offline (Training) part:

➡️ We train embedding model that will be used to transform inventory items into vector representations.

➡️ Deploy the model as a service to be later used for real-time embedding.

➡️ We apply previously trained embedding model to all owned inventory items.

➡️ Build an Approximate Nearest Neighbours search index from the embeddings.

➡️ Define additional filtering rules for retrieved candidates (e.g. don’t allow heavy metal songs for children under seven years old).

Online (Deployment) part:

➡️ Once an item comes, we embed it into a vector representation using the model from the offline part.

➡️ Use Approximate NN index to find the n most similar vectors.

➡️ Apply additional filtering rules defined in the offline part.

Candidate Ranking.

Offline (Training) part:

➡️ We build a Feature Store for item-level features and expose it for real-time feature retrieval.

➡️ Train a Ranking Model using the Features.

➡️ Deploy the model as a service to be later used for real-time scoring.

➡️ Define additional business rules that would overlay additional scores (e.g. downscore certain brands).

Online (Deployment) part:

➡️ Chaining from the previous online step, we enrich retrieved candidates with features from the Feature Store.

➡️ Apply the Ranking model for candidates to receive item scores.

➡️ Order by scores and apply additional business rules on top.

✅ By chaining both Online parts of Candidate Retrieval and Candidate Ranking end-to-end, we get a recommendation list that is shown to the user.

✅ Results and user actions against them are piped back to the Feature Store to improve future Ranking Models.

Twitter’s Recommender System

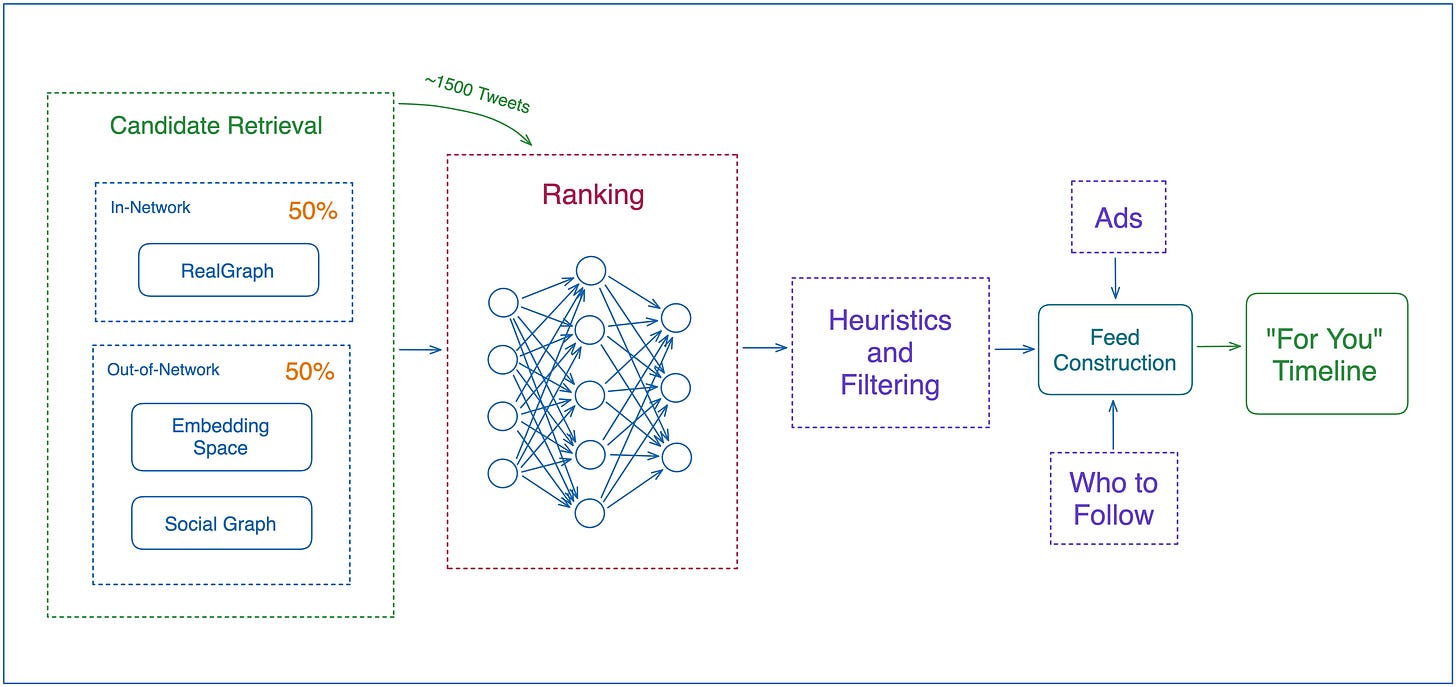

As expected, it follows a similar design to a general Recommender System and consists of four main stages.

1. Candidate Retrieval.

The Candidate Retrieval stage is the most exciting part of the entire chain. Twitter splits candidate sources into two parts:

𝘐𝘯-𝘕𝘦𝘵𝘸𝘰𝘳𝘬 𝘚𝘰𝘶𝘳𝘤𝘦𝘴.

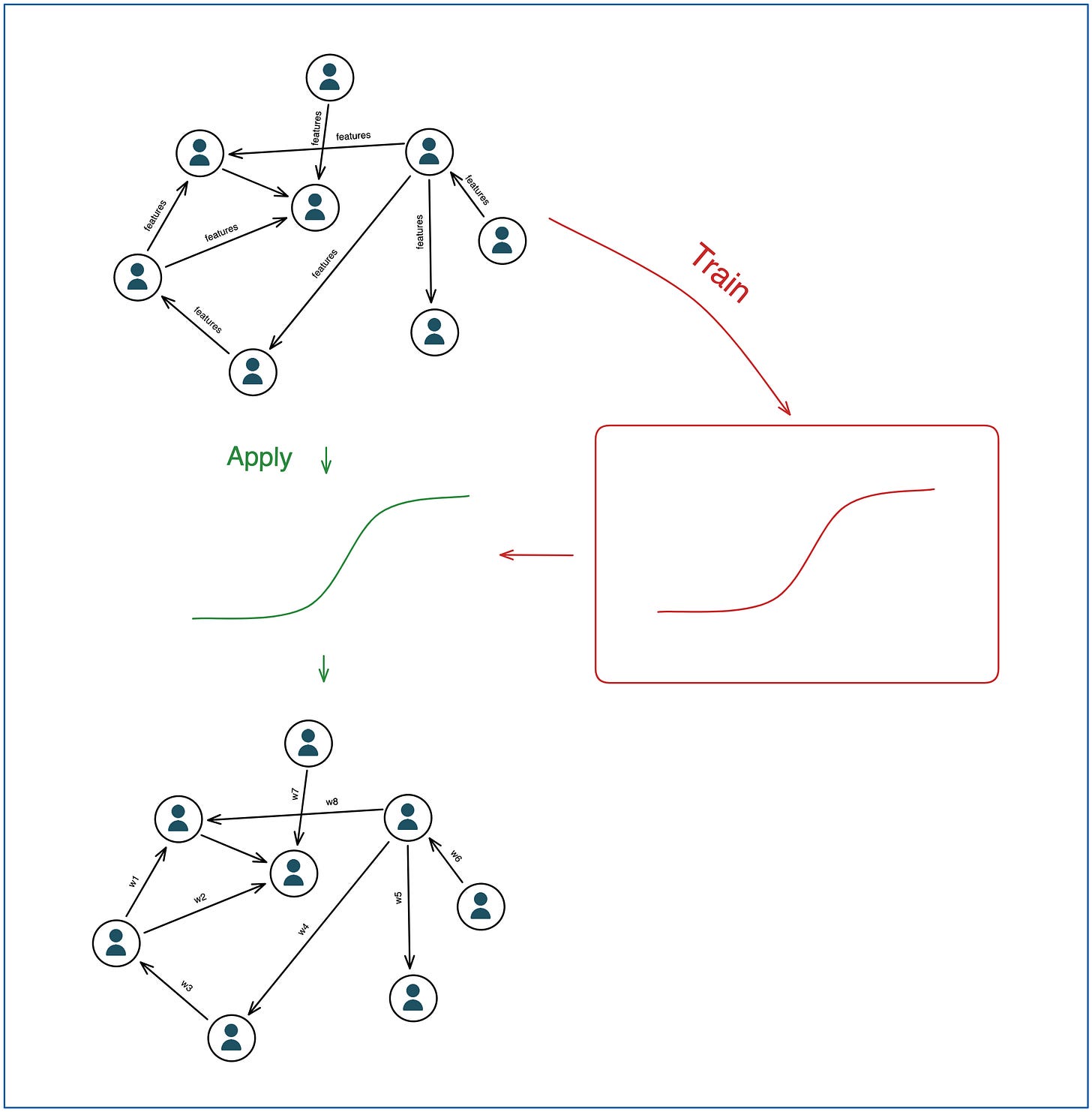

This is where tweet recommendations from the Twitter users you follow are generated. The main driver mechanism used is the RealGraph. In short, it is:

➡️ Directed weighted graph.

➡️ Twitter users are represented by Nodes of the graph.

➡️ Interactions between users are represented as Edges of the graph. If user A interacted or is following user B, the edge will be directed from A to B and wise versa.

➡️ Edges and nodes have features assigned to them.

➡️ These historical features are used separately to train a Logistic Regression Model to estimate the probability of a future directed interaction.

➡️ By using the previous model, a weight is given to each Edge.

➡️ The graph can then be used for multiple use cases.

➡️ The graph is rebuilt each day, so if you started interacting with a user today, you might expect his tweets start coming your way the next day.

➡️ Stale/inactive interaction Edges are periodically deleted.

✅ The higher the probability of you interacting with the tweet of a user, the higher the likelihood it will be chosen by the candidate retrieval stage.

𝘖𝘶𝘵-𝘰𝘧-𝘕𝘦𝘵𝘸𝘰𝘳𝘬 𝘚𝘰𝘶𝘳𝘤𝘦𝘴.

This is where tweet recommendations from users that you do not follow are coming from. The Out-of-Network candidates are sourced from two segments:

Social Graph.

➡️ Estimates what tweets you might find relevant given the users you follow or users with similar interests are engaging with.

➡️ The main driver of this source is the GraphJet in-memory graph processing engine developed by Twitter.

➡️ GraphJet assumes that the entire graph fits into memory on a single server.

➡️ By traversing the interaction graph, GraphJet can answer two types of queries: content recommendation queries - for a user, compute a list of tweets that the user might be interested in, and similarity queries - for a given tweet, compute a list of related tweets. [Source 4.]

As useful as Social Graph is, it has been steadily replaced by Embedded Spaces approach.

Embedding Spaces.

➡️ This approach uses Tweet and user embeddings to find similarities between Users, Tweets, and user-Tweet pairs for a more general similarity search.

➡️ The mostly used Embedded Space at Twitter is called SimClusters. Here is a high-level overview:

Stage 1:

A. Similarity Graph between influencers is constructed.

B. Influencers communities are clustered into topics (e.g. AI, pop music, art etc.).

C. Each Twitter user is assigned one or multiple communities

Stage 2:

Each item on Twitter (Tweets, articles, users etc.) is then embedded into a sparse vector where ith element in the vector represents its popularity in the ith community. These vectors can then be used to perform nearest neighbours search in the community embedding space.

✅ Candidate Retrieval stage generates a list of around 1500 tweets that might be relevant to you. It is on average made up of half candidates from In-Network sources and half of Out-of-Network Sources.

2. Ranking.

➡️ Thousands of features from each of the 1500 candidates from the previous list is fed to a ~48 million parameter MaskNet [Source 3.] neural network which ranks their relevance to you.

➡️ Relevance is defined by positive engagement - think Likes, Retweets, Replies etc.

➡️ The NN outputs ten labels for each Tweet that are then used to calculate the Tweet score.

➡️ Tweets ordered by the output score is what you would see in the timeline but some additional filtering rules are applied in the next step.

3. Filtering Rules.

Or as Twitter calls them - Heuristics, Filters, and Product Features. After the Tweets are ranked, they are then filtered to take into account rules like (taken directly from the blog post that announced open sourcing of the algorithm [Source 1]):

Visibility Filtering: Filter out Tweets based on their content and your preferences. For instance, remove Tweets from accounts you block or mute.

Author Diversity: Avoid too many consecutive Tweets from a single author.

Content Balance: Ensure we are delivering a fair balance of In-Network and Out-of-Network Tweets.

Feedback-based Fatigue: Lower the score of certain Tweets if the viewer has provided negative feedback around it.

Social Proof: Exclude Out-of-Network Tweets without a second degree connection to the Tweet as a quality safeguard. In other words, ensure someone you follow engaged with the Tweet or follows the Tweet’s author.

Conversations: Provide more context to a Reply by threading it together with the original Tweet.

Edited Tweets: Determine if the Tweets currently on a device are stale, and send instructions to replace them with the edited versions.

4. Feed Construction.

➡️ Finally, your “For You” timeline is constructed by blending ranked and filtered Tweets, ads, Follow Recommendations etc.

➡️ Twitter claims this pipeline to be executed 5 billion times per day which is definitely impressive!

Summing up.

As expected, Twitter’s Recommendation algorithm can be placed on a map of General Recommendation System Design.

Given the nature of Twitter, it is not surprising that candidate retrieval procedure relies a lot on graph algorithms.

It looks like Twitter is moving towards leveraging Embedding Spaces more and more when it comes to Out-of-Network Tweet candidate generation.

Interestingly, Twitter does not apply any Filtering Rules before Ranking procedure. It would make sense to do the filtering prior and after as it would reduce the noise that is fed to the neural network that ranks the candidates.

Two sets of filtering would be needed. The prior one could only remove malicious content that is globally agreed to be so before training the neural network.

Sources:

https://blog.twitter.com/engineering/en_us/topics/open-source/2023/twitter-recommendation-algorithm

https://www.ueo-workshop.com/wp-content/uploads/2014/04/sig-alternate.pdf

Join SwirlAI Data Talent Collective

If you are looking to fill your Hiring Pipeline with Data Talent or you are looking for a new job opportunity in the Data Space check out SwirlAI Data Talent Collective! Find out how it works by following the link below.