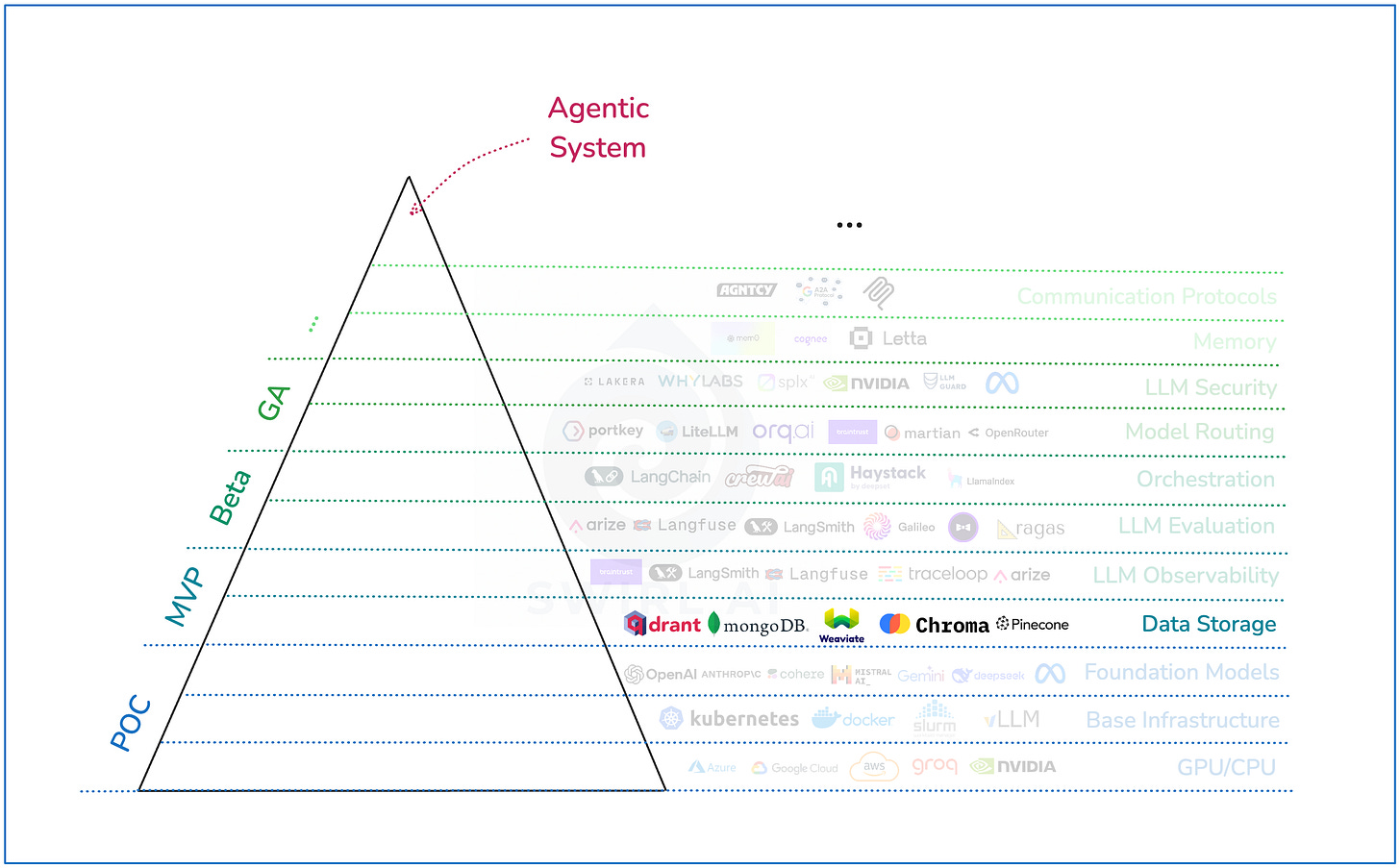

Enterprise Agentic AI Hierarchy of Needs

The crucial layers of infrastructure that make up a production-grade Agentic AI system.

👋 I am Aurimas. I write the SwirlAI Newsletter with the goal of presenting complicated Data-related concepts in a simple and easy-to-digest way. My mission is to help You upskill and keep You updated on the latest news in AI Engineering, Data Engineering, Machine Learning and the overall Data space.

In today’s episode I want to share with you one of the frameworks that I have designed. It helps me think about adding complexity to the infrastructure needed to power Agentic Systems that we are building for enterprises. I hope it will help you as well.

In the post you will find:

The Cowboy Agentic AI Hierarchy of Needs.

The Enterprise Agentic AI Hierarchy of Needs.

Different layers of infrastructure needed to power production grade Agentic Systems.

Problems that these layers are meant to solve and some notable vendors that are tackling them.

The framework has evolved over time. I will start with the initial version.

The Cowboy Agentic AI Hierarchy of Needs.

I started contemplating the framework I am about to introduce around the time ChatGPT was launched a few years ago. It was inspired by conversations I had with companies that were building agentic applications on the first available LLM APIs back then.

The idea behind the Agentic AI Hierarchy of Needs framework is simple but useful:

While working on Agentic Systems you want to build and ship them fast.

What are the minimal Agentic AI-specific tooling and infrastructure requirements you can get away with as you progress through the lifecycle of your product:

POC → MVP → Beta → GA → …

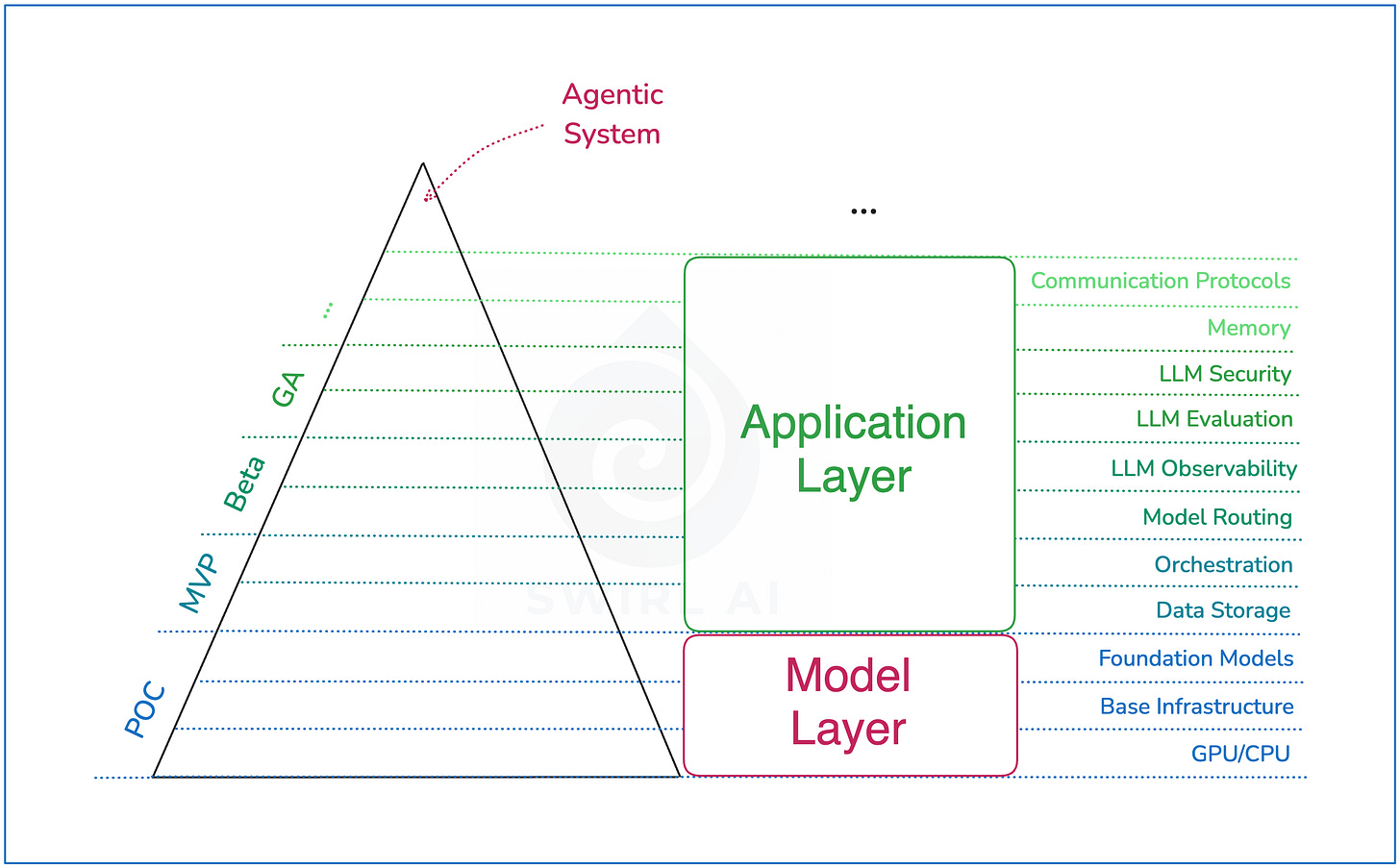

Here is the image representing the importance of each infrastructure layer as I observed it back then (it is based on how companies approached adding tooling as they developed their products).

Here is a short description of the diagram. We will go into some layers of the infrastructure in more detail as we progress through the blog post.

Model Layer:

CPU/GPU hardware: In most cases, we builders take this for granted, as it is handled for us.

Base Infrastructure: The next layer of infrastructure needed to handle LLM deployment and serving.

Foundation Models themselves: In most cases provided by the big AI Labs.

With only this layer in place, we can start prototyping, build the simplest applications and reach the POC level.

You can read more about the best practices of moving from POC to MVP etc. here:

Application Layer:

This is where most of the business value is created: continuous development of applications powered by GenAI.

To reach a reliable MVP we usually needed:

Data Storage.

LLM Orchestration.

Beta:

Model Routing.

LLM Observability.

GA:

LLM Evaluation.

LLM Security.

AI Agent Memory.

AI Agent Communication Protocols.
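To make the stacking explicit, here is a minimal Python sketch that encodes the stages and layers listed above as plain data. It assumes that each stage inherits every layer from the stages before it; `STAGES`, `LAYERS_ADDED` and `required_layers` are hypothetical names used only for illustration.

```python
# Hypothetical encoding of the Cowboy Agentic AI Hierarchy of Needs.
# Assumption: each stage adds layers on top of everything earlier stages needed.
STAGES = ["POC", "MVP", "Beta", "GA"]

LAYERS_ADDED = {
    "POC": ["CPU/GPU hardware", "Base Infrastructure", "Foundation Models"],
    "MVP": ["Data Storage", "LLM Orchestration"],
    "Beta": ["Model Routing", "LLM Observability"],
    "GA": [
        "LLM Evaluation",
        "LLM Security",
        "AI Agent Memory",
        "AI Agent Communication Protocols",
    ],
}


def required_layers(stage: str) -> list[str]:
    """Return every layer needed to reach `stage`, including earlier stages."""
    if stage not in STAGES:
        raise ValueError(f"unknown stage: {stage}")
    layers: list[str] = []
    for s in STAGES[: STAGES.index(stage) + 1]:
        layers.extend(LAYERS_ADDED[s])
    return layers
```

For example, `required_layers("Beta")` returns the three Model Layer entries plus Data Storage, LLM Orchestration, Model Routing and LLM Observability.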

I now call this the Cowboy Agentic AI Hierarchy of Needs. This is because, back then, almost none of the startups I worked with cared about application quality nearly as much as they cared about the application working at scale and never going down.

Of course, everyone wanted VC money flowing in. The applications would crumble due to quality issues; however, new customer inflow was still higher than churn, so that was OK.

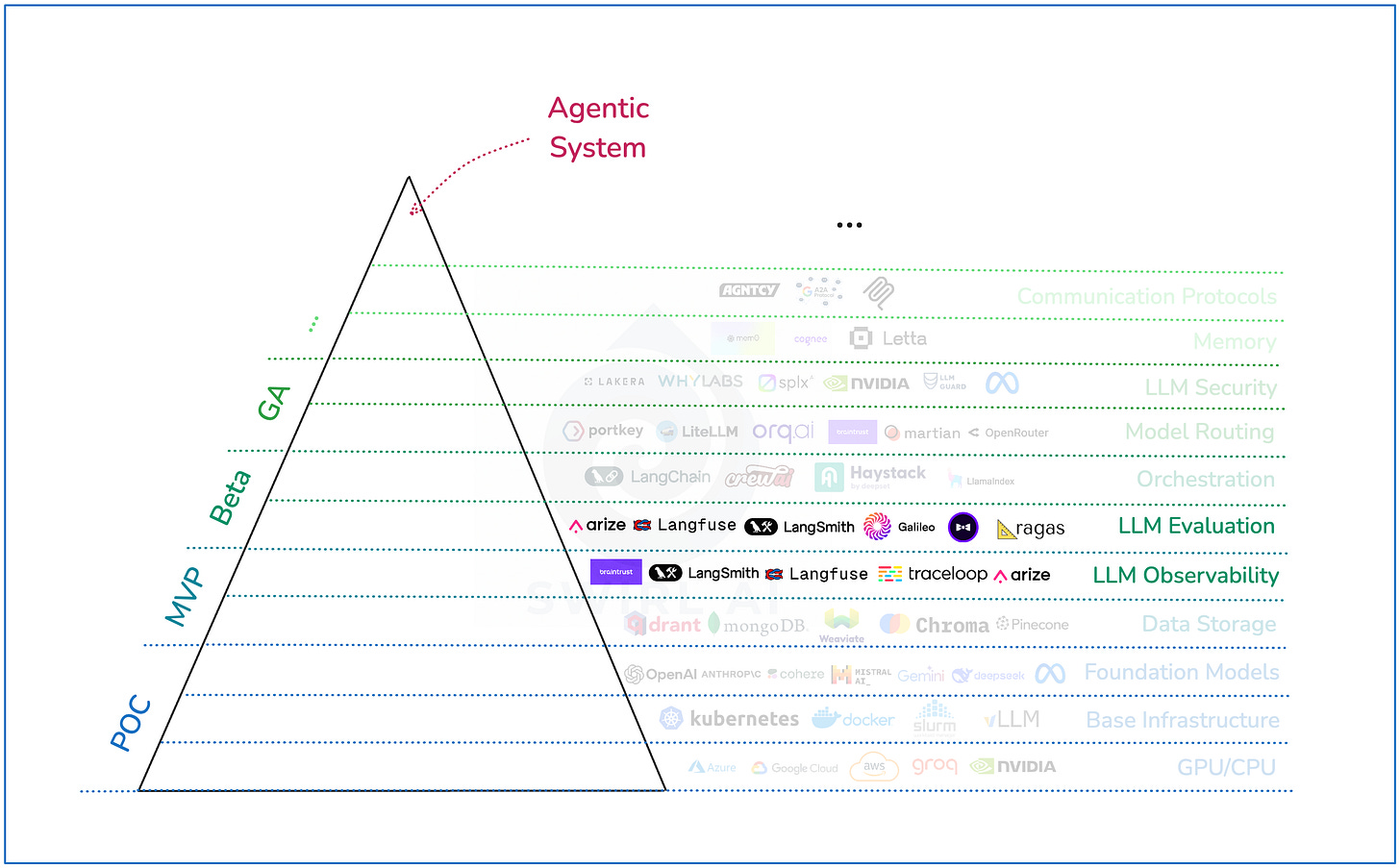

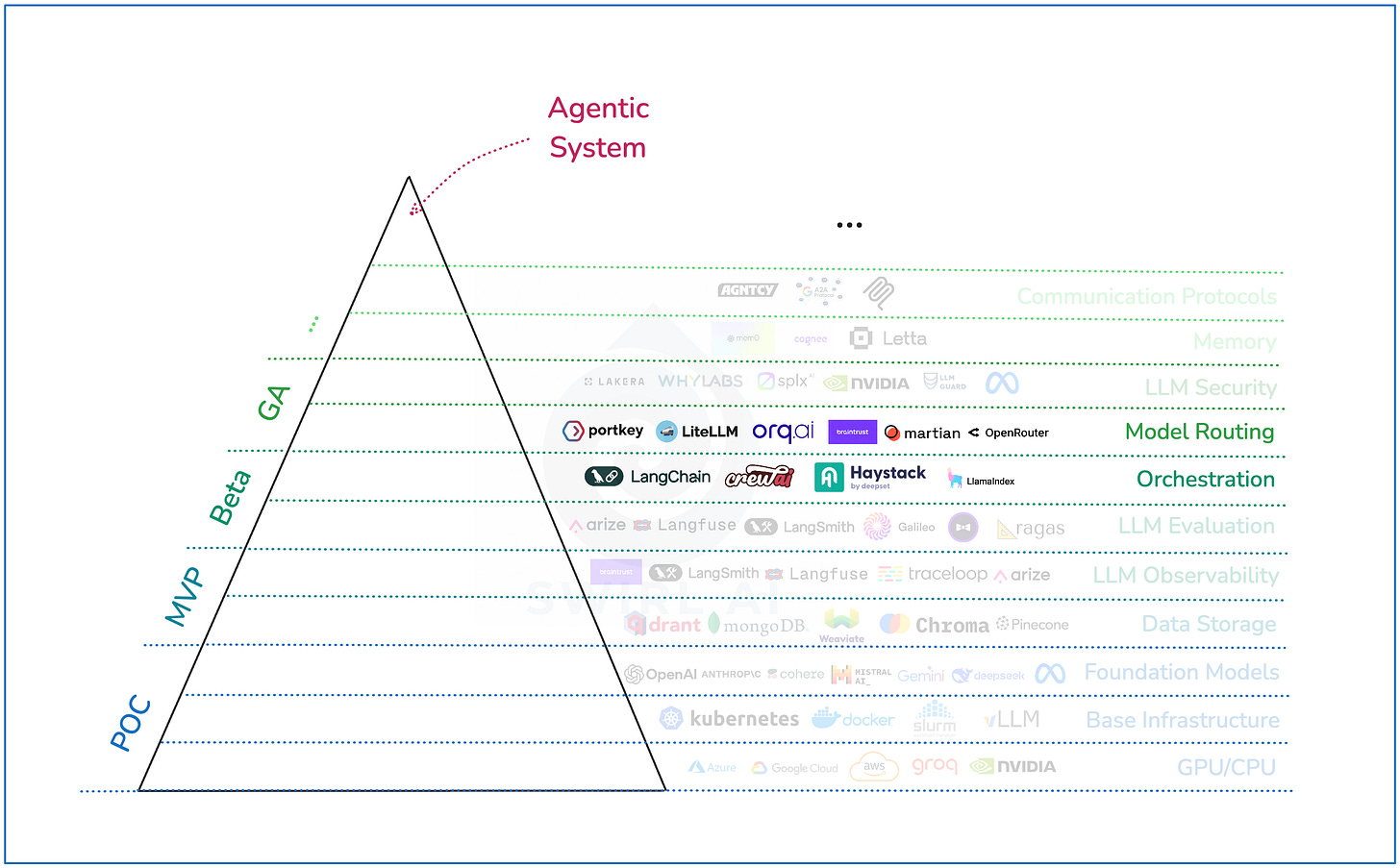

The Enterprise Agentic AI Hierarchy of Needs.

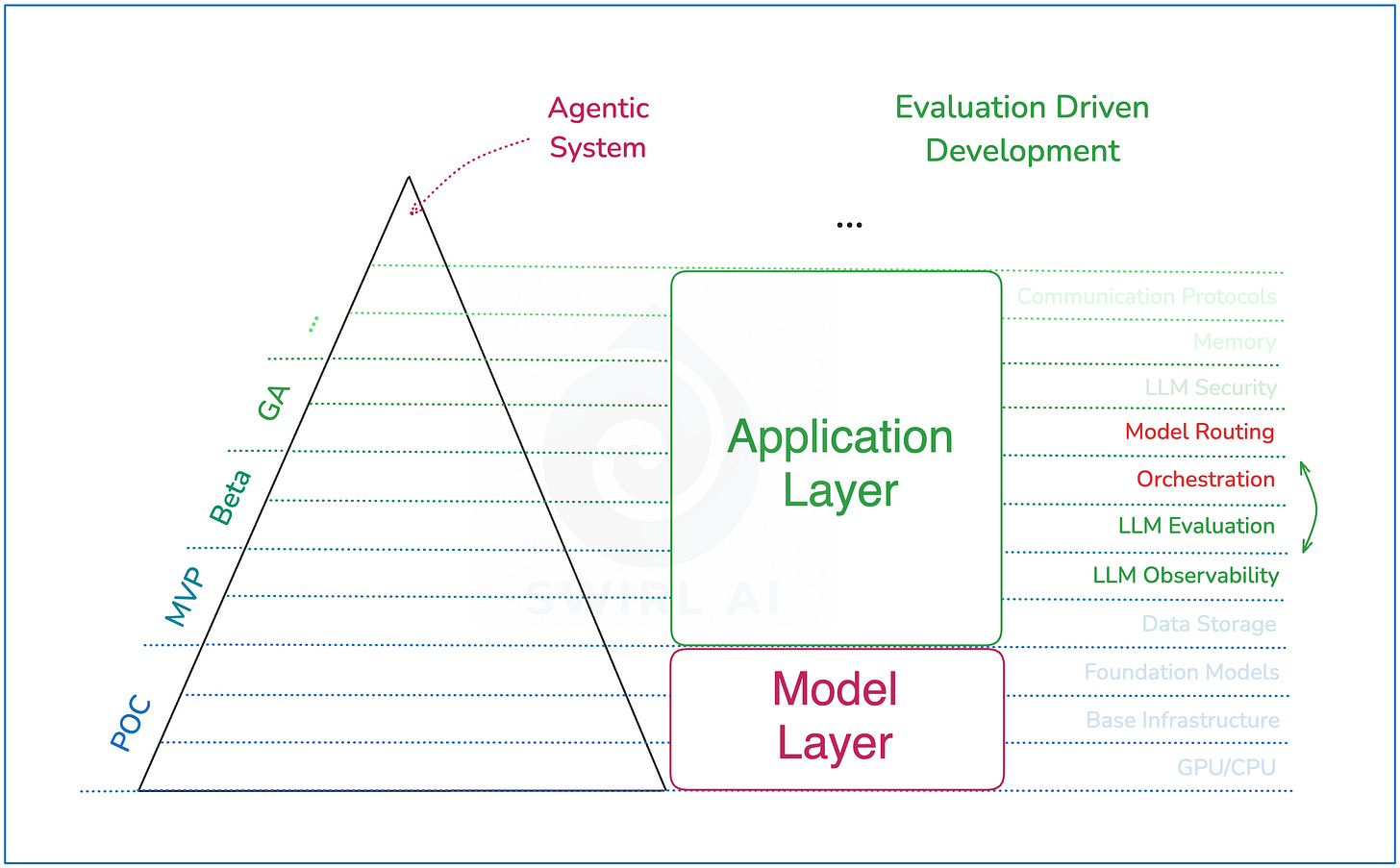

I still consult startups, but over the last year or so a lot of focus has shifted towards enterprise-grade agentic applications. In general, the Cowboy Agentic AI Hierarchy of Needs did not stand the test of time, nor did it meet the quality bar needed for applications being shipped to production.

The shift was expected: LLM Observability and Evaluation had to take center stage, pushing even Orchestration to a less important place in the Hierarchy of Needs.

In almost all cases, we set up the Observability foundations first, before adopting any of the LLM Orchestration frameworks available on the market. Sometimes these frameworks are not adopted at all (depending on the complexity of the project/product), as simple wrapper clients like instructor are enough and the chaining is easier to implement without a dedicated framework.

Also, I’ve noticed a trend of organisations dropping LLM Orchestration frameworks as the applications they build become more and more complex. This happens because low-level control becomes more important, and the frameworks usually hide it from the user, making it hard to reach.

Again, you can read more about the shift to Evaluation Driven Development here:

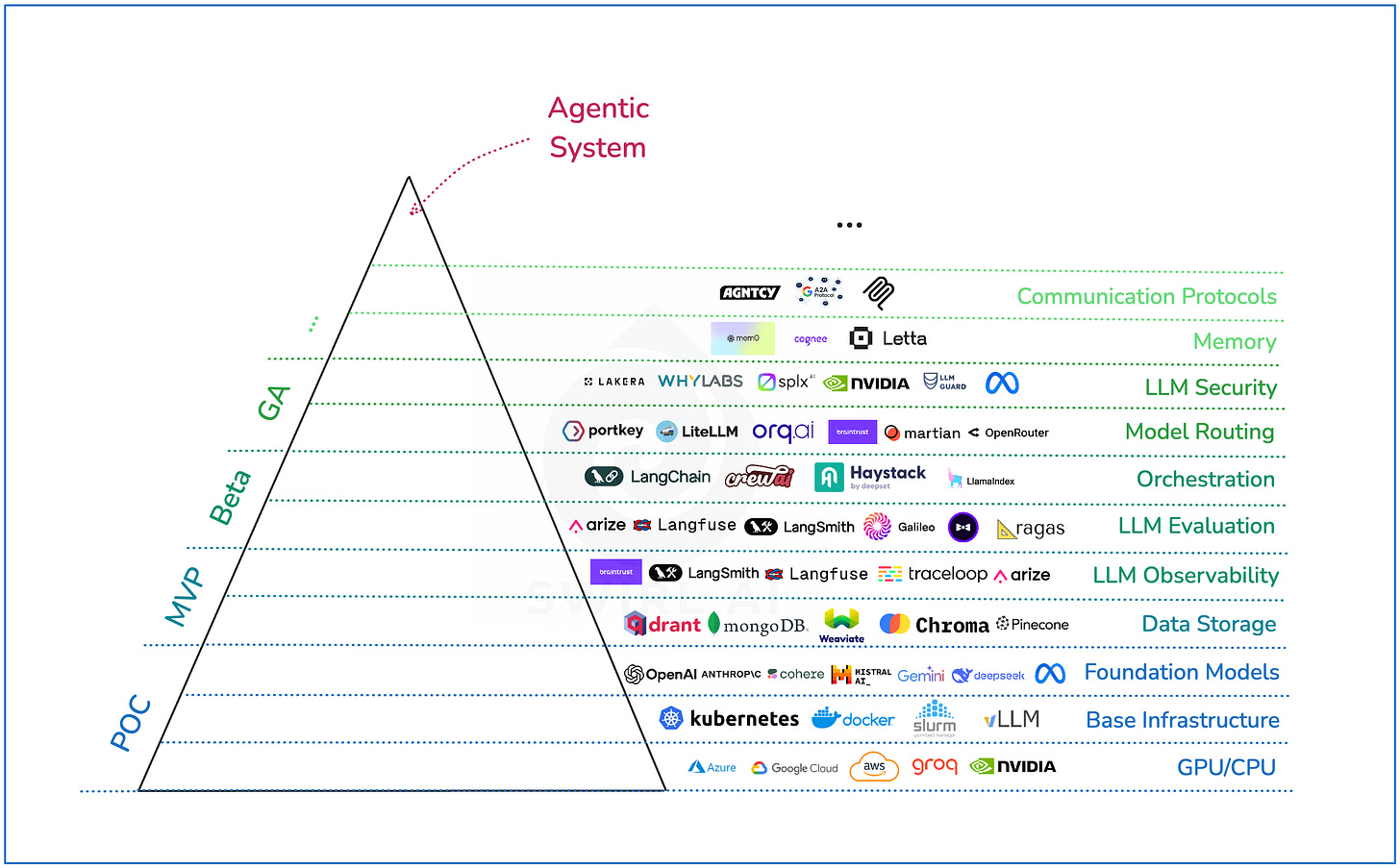

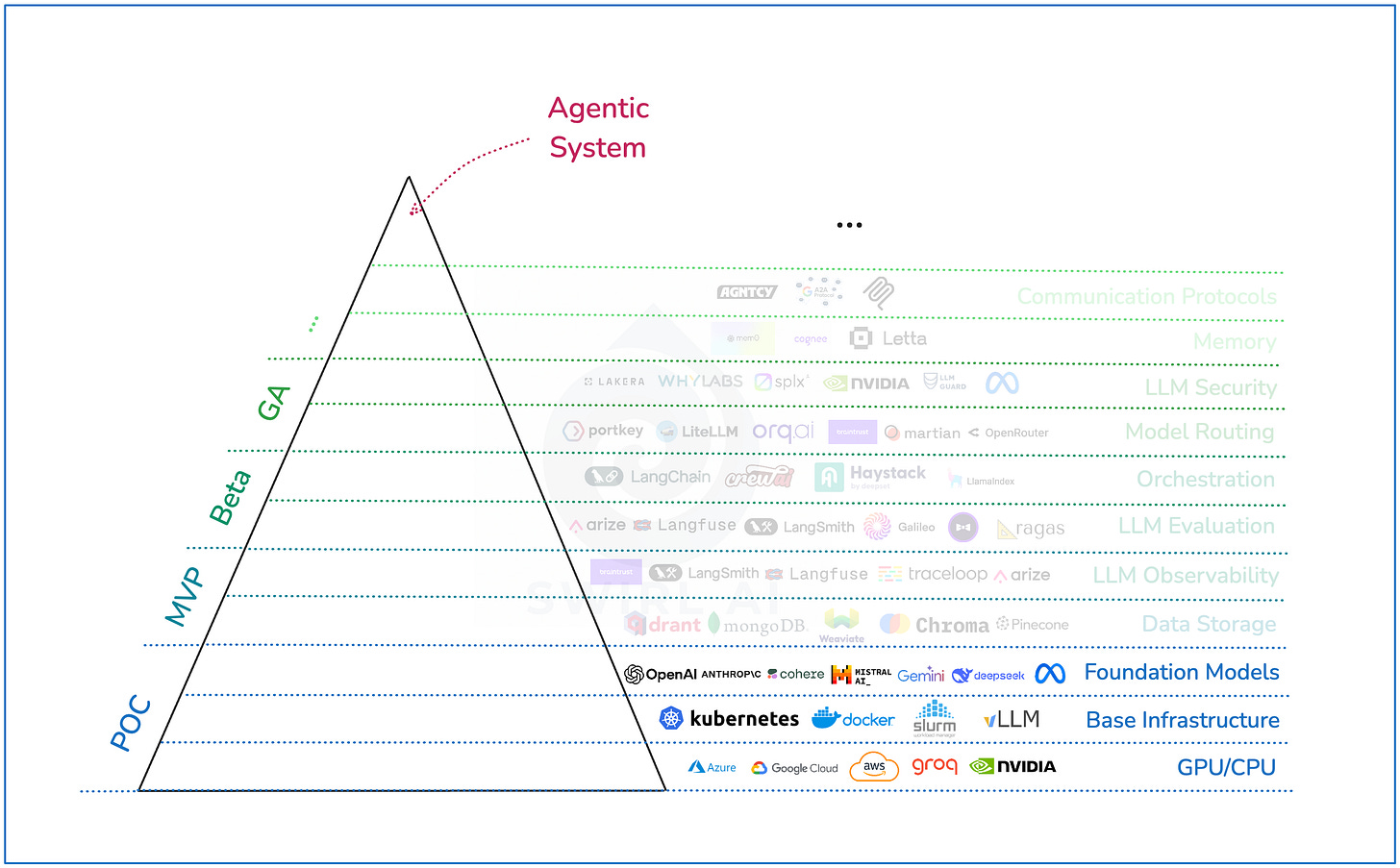

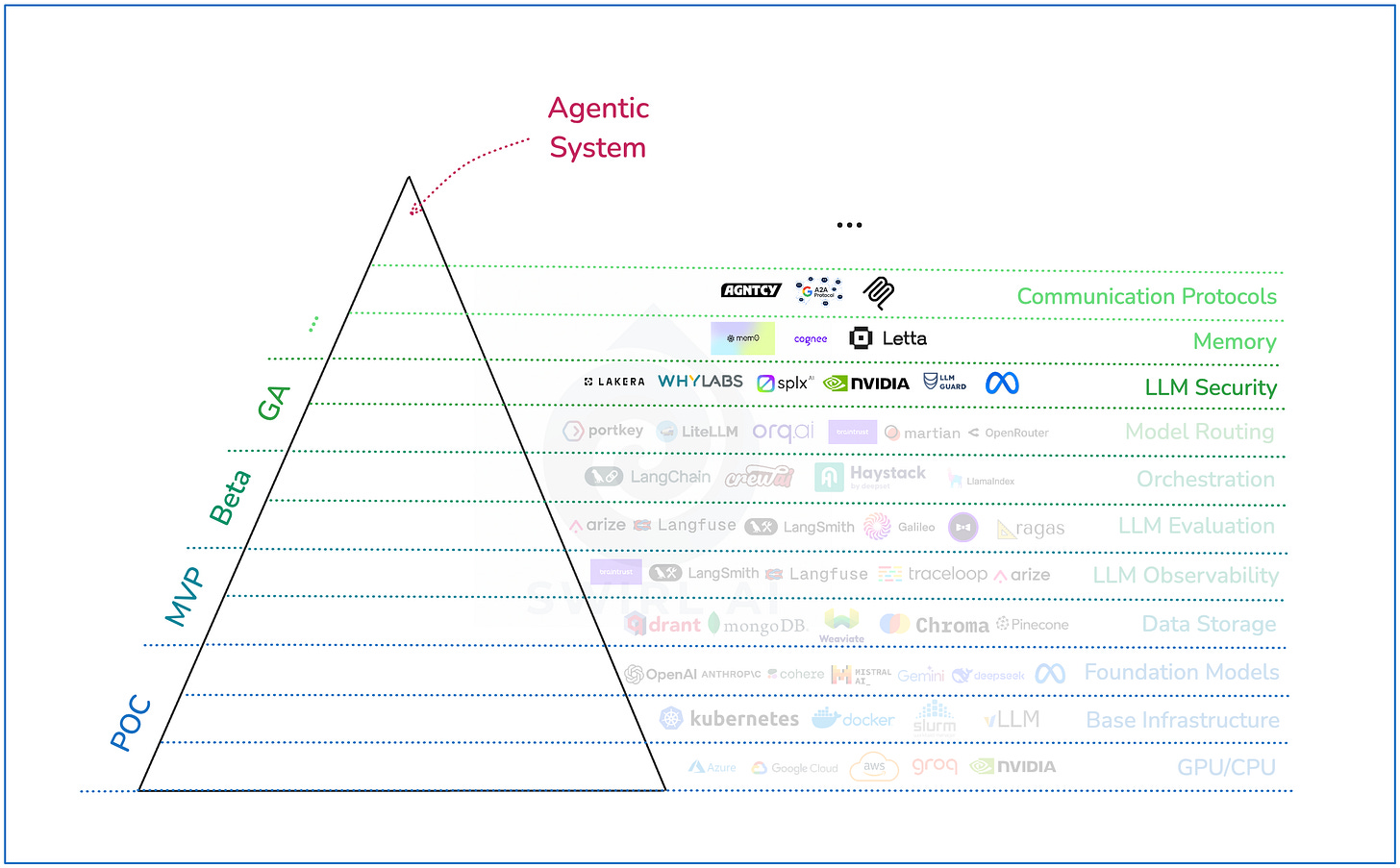

The Vendor Landscape.

The good thing about the AI hype is that so many platforms have been, and are still being, created to help with operations in each layer of the infrastructure stack. The picture below displays just a handful of the more popular ones on the market. I believe it is less than 10% of the players that exist.

I am planning to start curating an up-to-date market map. Stay tuned for more info, and feel free to reach out if you want to be included and believe I might miss you. You can reach me at aurimas@swirlai.com.

Now, let’s go deeper into some of the infrastructure layers defined and discuss what problems they are trying to solve.

The Model Layer.

The model layer can be split into 3 parts:

GPU/CPU Hardware.

Base Infrastructure.

Foundation Models.

GPU/CPU Hardware.

The problem: Accelerated compute R&D, manufacturing and supply.

Some notable vendors/frameworks:

R&D and manufacturing: NVIDIA, Groq, Google, AWS.

Supply: NVIDIA, Groq, Google, AWS, Azure, CoreWeave.

Extra notes: interestingly, with the increase in compute requirements for inference (both regular serving and test-time compute), even the big clouds are not able to keep up with demand.

Base Infrastructure.

The problem: efficient and scalable model deployment on single and multi-node clusters.

Some notable vendors/frameworks:

vLLM.

Kubernetes.

Slurm.

Extra notes: Vendors providing both proprietary and open model APIs leverage this infrastructure to serve the models to the public.

Foundation Models.

The problem: the need for general, task-specific and multimodal models that are increasingly capable of solving difficult problems with high precision.

Some notable vendors/frameworks:

OpenAI.

Anthropic.

Google.

Mistral.

Open Source community.

The Application Layer.

Data Storage.

Internal data is considered to be the main differentiator for companies that build Agentic Systems nowadays.

The problem: Most production-ready enterprise Agentic Systems rely on internal context available within the enterprise. There is a requirement for integration with a variety of data sources and for efficient retrieval of this data.

Some notable vendors/frameworks:

Qdrant.

Weaviate.

MongoDB.

Extra notes: Vector databases are just one piece of the puzzle. Efficient information retrieval systems can combine relational, graph, key-value, document and vector databases. It all depends on the use case, and as an AI Engineer you should not always default to vector.
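A minimal sketch of what not defaulting to vector can look like, assuming two hypothetical in-memory stores: exact identifiers (here, made-up order IDs) are answered with a key-value lookup, and only fuzzy questions fall back to cosine similarity over embeddings. The query embedding is passed in as a plain list because the embedding model is out of scope, and none of these names come from Qdrant, Weaviate or MongoDB.

```python
import math

# Hypothetical key-value store: exact lookups by order ID.
ORDERS_KV = {"ORD-1001": {"status": "shipped"}, "ORD-1002": {"status": "pending"}}

# Hypothetical vector store: document title -> toy 3-dimensional embedding.
DOC_VECTORS = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.2],
}


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query: str, query_vector: list[float]):
    # Exact identifiers are answered by a key-value lookup: no embeddings needed.
    for token in query.split():
        if token in ORDERS_KV:
            return ("kv", ORDERS_KV[token])
    # Fuzzy, semantic questions fall back to vector similarity.
    best = max(DOC_VECTORS, key=lambda k: cosine(query_vector, DOC_VECTORS[k]))
    return ("vector", best)
```

The point is the routing decision rather than the stores themselves: a query like `"status of ORD-1001"` never touches the vector index.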

Observability and Evaluation.

Observability.

The problem: Agentic Systems are non-deterministic and can seem like black boxes from the outside. We need to be able to efficiently trace all of the actions happening within the application by instrumenting the code, and then perform analytics on the traced data. There is also a need to implement LLMOps practices such as prompt versioning, which should also happen in the Observability layer.

Some notable vendors/frameworks:

LangSmith.

Langfuse.

Arize.

Extra notes: Observability platforms often come with their own instrumentation SDKs. Some Evaluation as well as Versioning capabilities are part of these platforms too.
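To illustrate what instrumentation means in practice, here is a minimal, hypothetical tracing decorator that records a span (inputs, output or error, duration) for every wrapped call, alongside a tiny versioned prompt registry. It is not the SDK of LangSmith, Langfuse or Arize: those platforms export spans to a backend, while this sketch only appends them to an in-memory list. `fake_llm` stands in for a real model call.

```python
import functools
import time
import uuid

# In-memory span sink; an observability platform would export these instead.
TRACE: list[dict] = []

# Hypothetical versioned prompt registry keyed by (prompt name, version).
PROMPTS = {
    ("summarise", 1): "Summarise: {text}",
    ("summarise", 2): "Summarise in one sentence: {text}",
}


def traced(name: str):
    """Decorator that records a span for every call of the wrapped function."""
    def decorator(fn):
        @functools.wraps(fn)
        def wrapper(*args, **kwargs):
            span = {"id": uuid.uuid4().hex, "name": name,
                    "inputs": {"args": args, "kwargs": kwargs}}
            start = time.perf_counter()
            try:
                span["output"] = fn(*args, **kwargs)
                return span["output"]
            except Exception as exc:
                span["error"] = repr(exc)  # failed calls are traced too
                raise
            finally:
                span["duration_s"] = time.perf_counter() - start
                TRACE.append(span)
        return wrapper
    return decorator


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call.
    return prompt.upper()


@traced("summarise")
def summarise(text: str, prompt_version: int = 2) -> str:
    # Pass prompt_version as a keyword so it is captured in the span inputs.
    template = PROMPTS[("summarise", prompt_version)]
    return fake_llm(template.format(text=text))
```

Because every span records the prompt version it ran with, analytics on the traced data can compare outputs across prompt versions.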

Evaluation.

The problem: Agentic Systems are non-deterministic, so there is a need to manage exact and non-exact evaluation rules that are run against the data produced by the system. It is the only way to make sure that the system you are building and evolving behaves as expected.

Some notable vendors/frameworks:

Ragas.

Arize.

Galileo.

Extra notes: While the vendors do provide some out of the box evaluations, in most cases you will have to define your own evaluation rules. Also, you can’t blindly rely on evals defined in different platforms - even though the naming of the eval rules can match, the implementation is most likely different.
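Here is a minimal sketch of one exact and one non-exact rule, assuming the system is asked to return JSON with an `answer` field. The exact rule checks that the output parses as JSON; the non-exact rule scores the answer against a reference with token-overlap F1. The field name, the 0.5 threshold and the choice of F1 are illustrative assumptions, not how Ragas, Arize or Galileo implement their metrics.

```python
import json


def token_f1(output: str, reference: str) -> float:
    """Non-exact rule: token-overlap F1 between an answer and a reference."""
    out, ref = output.lower().split(), reference.lower().split()
    common = sum(min(out.count(t), ref.count(t)) for t in set(out))
    if common == 0:
        return 0.0
    precision, recall = common / len(out), common / len(ref)
    return 2 * precision * recall / (precision + recall)


def evaluate(record: dict, f1_threshold: float = 0.5) -> dict:
    # Exact rule: the raw output must be valid JSON.
    try:
        parsed = json.loads(record["output"])
    except json.JSONDecodeError:
        return {"valid_json": False, "answer_f1": 0.0, "passed": False}
    answer = str(parsed.get("answer", "")) if isinstance(parsed, dict) else ""
    f1 = token_f1(answer, record["reference"])
    return {"valid_json": True, "answer_f1": f1, "passed": f1 >= f1_threshold}
```

Running `evaluate` over every traced output turns these rules into a regression suite you can rerun whenever prompts or models change.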

Orchestration and Model Routing.

Orchestration.

The problem: Agentic Systems often take the form of complex, non-deterministic chains of LLM or other GenAI model calls. There is a need for frameworks that help developers quickly build these systems and manage their complexity as they evolve.

Some notable vendors/frameworks:

LangGraph.

CrewAI.

LlamaIndex.

Extra notes: Very often, simple wrapper clients like instructor are enough to start off without adopting any dedicated LLM Orchestration Framework. Also, these frameworks hide some low-level implementation details that you would want to tweak to achieve the best performance of your application, so it might make sense to drop the framework once your application becomes complex enough. Having said that, frameworks like LangGraph are moving in the right direction by allowing low-level tweaks if needed.
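As a sketch of chaining without a dedicated framework, the pipeline below is plain function composition over a hypothetical `fake_llm` callable. In a real application each step could wrap a client such as instructor to get structured output; that dependency is left out here so the example stays self-contained.

```python
from typing import Callable

LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in; swap in a real client call here.
    if prompt.startswith("Extract keywords"):
        return "latency, retries"
    return f"Draft about: {prompt.split(':', 1)[1].strip()}"


def extract_keywords(llm: LLM, ticket: str) -> list[str]:
    raw = llm(f"Extract keywords from: {ticket}")
    return [k.strip() for k in raw.split(",") if k.strip()]


def draft_reply(llm: LLM, keywords: list[str]) -> str:
    return llm("Write a reply covering: " + ", ".join(keywords))


def pipeline(llm: LLM, ticket: str) -> str:
    # The "chain" is plain function composition: every step is visible
    # and can be tweaked, retried or traced without framework internals.
    keywords = extract_keywords(llm, ticket)
    if not keywords:
        return "Escalate to a human."
    return draft_reply(llm, keywords)
```

Passing the model in as a parameter keeps the low-level control in your hands: swapping providers, adding retries or wrapping a step in a tracer is an ordinary code change.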

Model Routing.

The problem: Not all model APIs you use will be stable enough to handle your production traffic. There is a need for a routing layer that allows falling back to a different model provider if there are issues with the main one.

Some notable vendors/frameworks:

LiteLLM.

OrqAI.

Portkey.

Extra notes: properly switching between models is harder than it might look. For example, a prompt that works for OpenAI models might not work well for the Claude family of models. Together with the fallback models, you should configure fallback prompts. You should also closely tie Routing with Observability.
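A minimal sketch of fallback routing with a dedicated prompt per model, assuming each provider client is a plain callable that raises on failure. `Route`, `route` and `AllRoutesFailed` are hypothetical names, not the LiteLLM, OrqAI or Portkey APIs; the `log` list is a stand-in for sending routing decisions to your Observability layer.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Route:
    name: str
    call: Callable[[str], str]  # hypothetical provider client
    prompt_template: str        # prompt tuned for this model family


class AllRoutesFailed(Exception):
    pass


def route(routes: list[Route], log: list[dict], **fields) -> str:
    """Try each route in order, rendering that route's own prompt."""
    errors = []
    for r in routes:
        prompt = r.prompt_template.format(**fields)
        try:
            out = r.call(prompt)
            log.append({"route": r.name, "ok": True})
            return out
        except Exception as exc:
            # Record the failure so routing is visible in traces.
            log.append({"route": r.name, "ok": False, "error": repr(exc)})
            errors.append(exc)
    raise AllRoutesFailed(errors)
```

The design choice worth noting is that the prompt lives on the route, not on the request, so a fallback model never receives a prompt that was tuned for a different model family.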

LLM Security, Agent Memory and Communication Protocols.

Security.

The problem: Real Agentic Systems have agency over some of our internal systems (e.g. data retrieval, automated ticket creation etc.). Malicious actors can manipulate natural language based interfaces to extract sensitive data or perform unintended actions within your infrastructure. We need safety guardrails that can prevent this and help us identify existing vulnerabilities.

Some notable vendors/frameworks:

splxAI.

Lakera.

WhyLabs.

Extra notes: LLM Security can be split into multiple categories like:

AI Application Red Teaming - continuous attempts to jailbreak your application.

Guardrails - making sure that no unexpected data reaches an LLM or is exposed to the user of your application (e.g. PII).
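To make the guardrails idea concrete, here is a deliberately simple, regex-based PII redaction applied both before the prompt reaches the model and before the output reaches the user. The two patterns are illustrative assumptions and would miss many real-world formats; `redact` and `guarded_call` are hypothetical helpers, and dedicated tools such as those listed above go much further.

```python
import re

# Illustrative patterns only; production guardrails need far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact(text: str) -> tuple[str, list[str]]:
    """Replace matches with a placeholder and report which kinds were found."""
    found = []
    for label, pattern in PII_PATTERNS.items():
        if pattern.search(text):
            found.append(label)
            text = pattern.sub(f"[{label}]", text)
    return text, found


def guarded_call(llm, user_input: str) -> str:
    # Guard the input: PII never reaches the model.
    safe_input, _ = redact(user_input)
    output = llm(safe_input)
    # Guard the output: PII the model produced never reaches the user.
    safe_output, _ = redact(output)
    return safe_output
```

The `found` list returned by `redact` is also a natural signal to log, since repeated PII hits can point at a vulnerability worth investigating.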

Agent Memory.

The problem: Effective reasoning and planning capabilities of Agentic Systems strongly rely on the actions that the system has already taken, as well as on the context available to the organisation internally and externally. We are used to modelling this memory by splitting it into short-term and long-term. There is a need for a layer that helps efficiently manage and retrieve relevant memories on demand. You can read more about the types of memories in Agentic Systems here:

Some notable vendors/frameworks:

mem0.

Cognee.

Letta.

Extra notes: These memory layers are not just databases, but rather frameworks for efficient memory management and retrieval.
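As a rough illustration of the short-term/long-term split, the hypothetical `AgentMemory` class below keeps the last few turns in a bounded buffer and retrieves long-term facts on demand. Keyword overlap stands in for embedding-based retrieval; this is not the API of mem0, Cognee or Letta.

```python
from collections import deque


class AgentMemory:
    """Hypothetical sketch: bounded short-term buffer plus a long-term store."""

    def __init__(self, short_term_size: int = 3):
        self.short_term = deque(maxlen=short_term_size)  # most recent turns only
        self.long_term: list[str] = []                   # persisted facts

    def add_turn(self, turn: str) -> None:
        self.short_term.append(turn)  # oldest turn drops off when full

    def remember(self, fact: str) -> None:
        self.long_term.append(fact)

    def recall(self, query: str, k: int = 2) -> list[str]:
        # Keyword-overlap scoring stands in for embedding-based retrieval.
        q = set(query.lower().split())
        scored = [(len(q & set(f.lower().split())), f) for f in self.long_term]
        scored = [s for s in scored if s[0] > 0]
        scored.sort(key=lambda s: s[0], reverse=True)
        return [f for _, f in scored[:k]]

    def build_context(self, query: str) -> str:
        # Relevant long-term facts first, then the recent conversation.
        return "\n".join(self.recall(query) + list(self.short_term))
```

Only the memories relevant to the current query are pulled into the context, which is the on-demand retrieval the problem statement describes.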

Communication Protocols.

The problem: As we are entering the era of IoA (Internet of Agents), where AI Agents are distributed over the network and developed by different organisations, we need standards for how communication between these systems should be handled.

You can read more about how MCP and A2A protocols work here:

Some notable vendors/frameworks:

MCP.

A2A.

Agentcy.
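MCP messages are JSON-RPC 2.0, and tools are invoked with the `tools/call` method, passing the tool `name` and its `arguments`. The sketch below only builds such a request and checks a response envelope; the `create_ticket` tool is hypothetical, and the transport (stdio or HTTP) is left out.

```python
import itertools
import json

_ids = itertools.count(1)  # JSON-RPC request ids must be unique per session


def tools_call_request(tool_name: str, arguments: dict) -> str:
    """Serialise an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def parse_response(raw: str, expected_id: int) -> dict:
    """Return the `result` of a JSON-RPC 2.0 response, raising on `error`."""
    msg = json.loads(raw)
    if msg.get("jsonrpc") != "2.0" or msg.get("id") != expected_id:
        raise ValueError("unexpected message")
    if "error" in msg:
        raise RuntimeError(msg["error"].get("message", "unknown error"))
    return msg["result"]
```

The shared envelope is what lets agents from different organisations interoperate, but note that nothing in it carries tracing context across hops.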

Extra notes: Open protocols for Agent communication are important, but they are just one piece of the puzzle. There will be a need for standards that govern all of the existing protocols, as well as the other missing pieces. E.g.:

How do we standardise tracing and Observability in multi-agent IoA systems?

How do we retain the identity of the running job of an AI Agent instance if the communication standard is not unified in different parts of the pipeline?

Summary.

Agentic Systems might look simple from the outside, but it takes a lot of effort to bring them to production reliably.

The proliferation of vendors in the AI space, driven by the heat of the market, is an amazing benefit we get as builders.

You should think carefully about the minimal set of additional infrastructure elements you need as your AI application matures.

Adopting Evaluation Driven Development is key, especially when building for enterprises.

Hope you enjoyed this article and hope to see you next Wednesday!