SAI #12: CAP Theorem.

CAP Theorem, MLOps Maturity Model: Level 0.

👋 This is Aurimas. I write the weekly SAI Newsletter, where my goal is to present complicated Data-related concepts in a simple and easy-to-digest way. The goal is to help You UpSkill in Data Engineering, MLOps, Machine Learning and Data Science areas.

Hope you are having a great start to the year 2023!

Today we cover 2 new topics:

What is the CAP Theorem.

MLOps Maturity Levels by GCP: Level 0.

CAP Theorem

What is the CAP Theorem and why does it matter to you as a Data Engineer?

As a modern Data Engineer you are most likely to work in the Cloud utilising all kinds of Distributed technologies and Databases. Understanding CAP theorem will help you choose a Database that is fit for your application use case.

Let’s take a closer look.

First, let’s define what we mean by distributed:

➡️ A Cluster is involved.

➡️ The Cluster is composed of multiple Nodes that communicate with each other over the Network.

➡️ Multiple Nodes could be deployed on a single Server but in most production setups you will see a Node per Server.

➡️ Data is distributed between multiple Nodes.

Now, let’s define what CAP stands for:

➡️ Consistency - All Nodes in the cluster should see the same data. A read operation should return the data of the most recent write, regardless of which node serves it.

➡️ Availability - Every request sent to a working node gets a response, regardless of the state of the other nodes. The system continues to operate even with multiple nodes down.

➡️ Partition-Tolerance - If a Network Partition occurs (communication between some nodes in the cluster breaks) the Distributed System does not fail even if some messages are dropped or delayed.

The CAP Theorem states that a distributed system can guarantee at most two of these three properties at the same time, so your system is one of three:

👉 CA - Consistent and Available.

👉 AP - Available and Partition-Tolerant.

👉 CP - Consistent and Partition-Tolerant.

❗️ In the real world you will not find many CA Systems: Network Partitions are common in Distributed Databases, so they must be Partition-Tolerant. This means that we are usually choosing between AP and CP Systems.
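To make the AP versus CP choice concrete, here is a minimal Python sketch of a toy two-node cluster. Everything in it (the `Node` and `Cluster` classes, the `mode` flag, the `Unavailable` error) is hypothetical and made up for illustration; real databases use far more sophisticated replication protocols.

```python
class Unavailable(Exception):
    """Raised when a CP cluster refuses a request during a partition."""


class Node:
    def __init__(self, name):
        self.name = name
        self.data = {}


class Cluster:
    """Toy two-node cluster; mode is either "CP" or "AP" (hypothetical flag)."""

    def __init__(self, mode):
        self.mode = mode
        self.nodes = [Node("a"), Node("b")]
        self.partitioned = False  # True when the nodes cannot talk to each other

    def write(self, node_index, key, value):
        if self.partitioned:
            if self.mode == "CP":
                # Consistency first: refuse the write rather than let replicas diverge.
                raise Unavailable("cannot replicate during a partition")
            # Availability first: accept the write locally; replicas may now disagree.
            self.nodes[node_index].data[key] = value
            return
        # Healthy network: replicate the write to every node.
        for node in self.nodes:
            node.data[key] = value

    def read(self, node_index, key):
        if self.partitioned and self.mode == "CP":
            raise Unavailable("cannot confirm the latest value during a partition")
        return self.nodes[node_index].data.get(key)


def demo(mode):
    cluster = Cluster(mode)
    cluster.write(0, "x", 1)       # replicated to both nodes
    cluster.partitioned = True     # the network splits
    try:
        cluster.write(0, "x", 2)   # the client can only reach node "a"
        return cluster.read(0, "x"), cluster.read(1, "x")
    except Unavailable:
        return "unavailable"
```

In this sketch `demo("AP")` keeps answering but the two nodes return different values for `x`, while `demo("CP")` stays consistent by refusing to serve requests until the partition heals.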

Different databases are designed with different CAP guarantees. For example:

👉 MongoDB is a CP Database.

👉 Cassandra is an AP Database.

✅ Having said this, you can also configure consistency at the application level in Cassandra, but more on that in future posts.
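As a rough illustration of what tunable consistency means: in quorum-replicated stores such as Cassandra, a read is guaranteed to overlap with the latest successful write when the number of replicas consulted on read (R) plus the number of replicas acknowledging a write (W) is greater than the replication factor (N). The helpers below are a hypothetical sketch of that arithmetic only; they are not part of any Cassandra API.

```python
def quorum(replication_factor):
    """Size of a majority quorum for the given replication factor."""
    return replication_factor // 2 + 1


def reads_see_latest_write(n, w, r):
    """True when every set of r read replicas must overlap every set of w write replicas."""
    return r + w > n


N = 3  # assumed replication factor for this example

# QUORUM writes + QUORUM reads: 2 + 2 > 3, so reads overlap the latest write.
strong = reads_see_latest_write(N, quorum(N), quorum(N))

# ONE write + ONE read: 1 + 1 <= 3, so a read may hit a stale replica.
eventual = reads_see_latest_write(N, 1, 1)
```

With these numbers `strong` is `True` and `eventual` is `False`, which is the sense in which the application can move the same cluster closer to CP or closer to AP per request.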

MLOps Maturity Levels

What are the MLOps Maturity Levels? How can you make use of the concept?

Both GCP and Azure defined what they call MLOps Maturity Models at around the same time, at the beginning of 2020.

It is useful to understand the concept as it can help guide you while evolving your ML Platform automation. More importantly, it helps you understand which level is enough for your use case.

You will hear the term being thrown around in conversations so it will help you navigate them.

We will analyze GCP’s definition, which comprises 3 levels, and look into how to best approach moving from one level to the next.

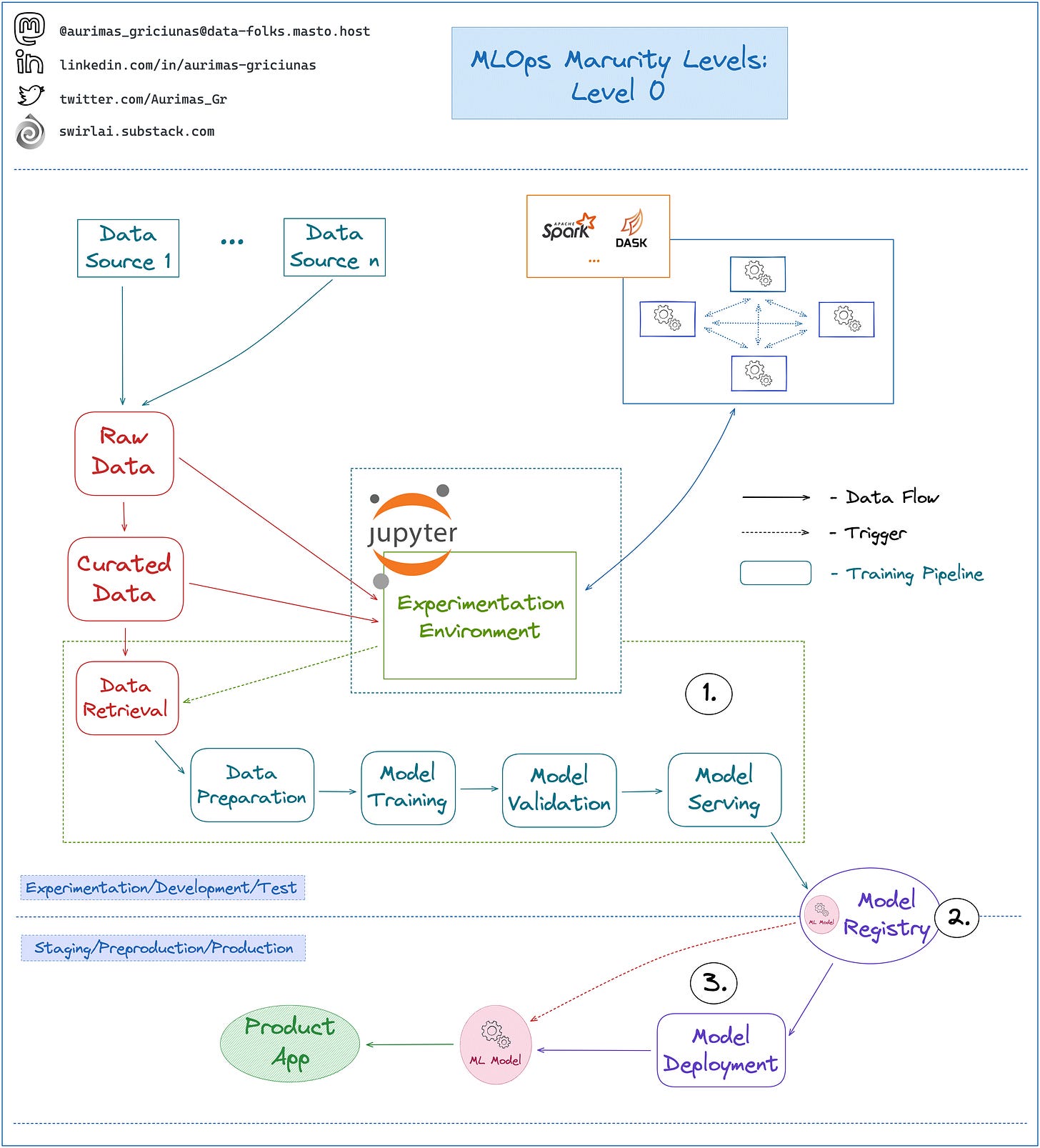

Today we look at Level 0:

The System is very simple and lacks any kind of automation. This is what you would see in most organizations that are just starting with running ML in Production.

1: The entire Machine Learning Pipeline is executed in the Experimentation Environment manually and on demand. Retraining does not happen often, and the pipelines are neither treated as an artifact nor tested according to Software Engineering best practices.

2: After the Model Artifact is created it is saved into a Model Registry - it could be just a simple Object Storage or a more advanced Model Registry solution.

3: Software Engineers pick up the Model Artifact and use the existing Deployment procedures to expose the Model Service to the Product Application.
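The three steps above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the "model" is a trivial mean predictor, the artifact is a JSON file rather than a real serialized model, a temporary local folder stands in for Object Storage, and all function names are hypothetical.

```python
import json
import tempfile
from pathlib import Path


def train(targets):
    """Step 1 stand-in for a manual training run: the 'model' is just the mean target."""
    return {"mean": sum(targets) / len(targets)}


def save_artifact(model, registry_dir, version):
    """Step 2: drop the Model Artifact into the 'registry' (here, a plain folder)."""
    path = Path(registry_dir) / f"model-{version}.json"
    path.write_text(json.dumps(model))
    return path


def load_for_serving(path):
    """Step 3: the service side picks the artifact up and wraps it in a predict function."""
    model = json.loads(Path(path).read_text())
    return lambda n_rows: [model["mean"]] * n_rows


registry = tempfile.mkdtemp()  # a local folder standing in for Object Storage
artifact_path = save_artifact(train([1.0, 2.0, 3.0]), registry, "v1")  # run by hand, on demand
predict = load_for_serving(artifact_path)
predictions = predict(2)
```

Note that nothing here is automated, versioned beyond a file name, or tested, which is exactly what characterises Level 0.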

✅ Extremely easy to set up:

👉 You only need a Notebook setup with access to the Data at different curation levels.

👉 ML is usually not the first use case to be deployed into Production which means that there is most likely a mature Software Deployment System already present in the organization.

✅ Extremely Fast for developing initial MVP Models for your business.

❗️ No Experiment Tracking System, which means that comparing several experiments with each other becomes difficult. Also - reproducing a historical Model Training Run is nearly impossible.

❗️ ML lifecycle lives in Notebooks that are complicated to track in code version control systems.

❗️ The System is extremely likely to suffer from Training/Serving skew as there is no connection between Offline and Online Data.

My thoughts on Level 0: it is ok to start here as long as you deliver value from your ML Models. What I would try to add as soon as possible is an Experiment Tracking System of any complexity - it could simply be metadata stored in Object Storage or a Database. In future Posts we will look at Levels 1 and 2 and what steps you need to take to move there.
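To show how small a first Experiment Tracking setup can be, here is a minimal sketch that writes one JSON metadata file per training run. It assumes a temporary local folder standing in for Object Storage; the function names, parameters and metric values are all made up for illustration.

```python
import json
import tempfile
import time
import uuid
from pathlib import Path


def log_run(tracking_dir, params, metrics):
    """Write one JSON file per training run and return the run id."""
    run_id = uuid.uuid4().hex
    record = {
        "run_id": run_id,
        "logged_at": time.time(),
        "params": params,
        "metrics": metrics,
    }
    (Path(tracking_dir) / f"{run_id}.json").write_text(json.dumps(record))
    return run_id


def best_run(tracking_dir, metric):
    """Return the logged run with the highest value of the given metric."""
    runs = [json.loads(p.read_text()) for p in Path(tracking_dir).glob("*.json")]
    return max(runs, key=lambda run: run["metrics"][metric])


tracking_dir = tempfile.mkdtemp()  # stands in for an Object Storage bucket

# Illustrative values only, not real experiment results.
log_run(tracking_dir, {"max_depth": 3}, {"auc": 0.81})
log_run(tracking_dir, {"max_depth": 6}, {"auc": 0.86})

winner = best_run(tracking_dir, "auc")
```

Even this much makes runs comparable and records which parameters produced which model, which are the two things Level 0 is missing.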

First of all - thanks for the substack!

I think it's not just OK to start at MLOps level 0, it's mandatory to start there! Even in a well-established company that has the resources to invest in a proper ML platform, I would start from this - the faster you can deliver value, the better it is for the ML function as a whole.

I am not entirely sure why you think this system is particularly likely to suffer from Training/Serving skew. The connection between Online and Offline data is the data lake - online data becomes offline data. I agree that at this level the skew is not monitored, but a data scientist will have an idea about this from their EDA - if there are big changes in the distribution of the training data, there will be changes when serving a model. What am I missing here? :)

I would also like to know what you think about the deployment options - you write that software engineers pick up the artefact to be exposed in the Model Service. Several things interest me here:

1. What do you have in mind with "model artefact"? Is this just a trained ML algorithm (e.g. XGBoost) that has its own serialisation / deserialisation format and can be loaded in different languages? Or is it the entire Model class, with other business logic (which can be substantial), serialised?

2. If it's just the trained algorithm, would you build the other logic in the service / app itself? Should engineers do it?

3. How do we think about the environment (especially in Python, as other languages might have "full jar" equivalents) in this case? Or is this, in your opinion, too complicated for level 0?

This topic - experiment to production - fascinates me, so it would be interesting to hear your thoughts. What have you seen working, and not working, in the past?

For example, all the "managed endpoints" offered by the cloud providers to expose models are great when you are just starting, because they take care of most things, but they are also very restrictive in other ways. In my experience companies outgrow them quite fast and have to invest in setting up something more complicated to keep productivity up.