SAI #28: Organisational structure for effective MLOps.

Let's look into how we should structure our organisations for effective MLOps practice implementation.

👋 I am Aurimas. I write the SwirlAI Newsletter with the goal of presenting complicated Data related concepts in a simple and easy-to-digest way. My mission is to help You UpSkill and keep You updated on the latest news in Data Engineering, MLOps, Machine Learning and overall Data space.

The value that adoption of MLOps practices brings to an organisation has now been widely understood and accepted. However, companies do struggle to adopt MLOps not only due to technical difficulties but also because of the organisational structures that are present at a given point in time.

Today I want to lay down some of my thoughts on how to approach the evolution of organisational structure for effective implementation of MLOps practices.

The structure of an ML Project.

Before delving deeper, we should understand what a regular Machine Learning project life-cycle looks like. I find that a good mind map consists of a split into the following four steps.

Ideation

It all begins at the ideation stage, where business meets data professionals to raise a problem. Together, they try to answer questions like:

Do we need Machine Learning to solve the problem?

Do we have data assets needed to build the Machine Learning model?

Will acquiring such assets eventually result in a positive ROI?

Can Machine Learning Systems that are currently available handle the business use case when implemented?

…

Pro hint: If you do not need an ML Model to solve the given problem, then solve it without one. Regular software projects are a lot less complex and require significantly less investment.

Involved: Subject Matter Experts, Data Scientists, Data Engineers, Project Managers and more.

Experimentation

Once it is agreed that you want and, more importantly, need to build the model, Data Scientists experiment with the available data assets and multiple ML Model architectures to build a PoC of the model, which is handed over to the next stage. What is handed over here is a model binary that can then be embedded into different types of ML application deployments (Batch, Stream, Real-Time, Edge).

Involved: Data Scientists.

Deployment

The model is productionised by transforming it into a Machine Learning System. In modern MLOps this usually means that an ML Pipeline, and not only the model binary, is deployed into the production environment as a first-class citizen. This enables Continuous Training (CT).

Involved: Software Engineers or ML Engineers.

The distinction of who is involved at this stage is extremely important: the more mature the ML Function becomes, the more the deployment procedure tends to be handled by ML Engineers.
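To make the difference between deploying a model binary and deploying an ML Pipeline more concrete, here is a minimal sketch. Everything in it is an assumption for illustration: `training_pipeline`, `retrain` and the "model" that always predicts the mean are hypothetical stand-ins, not a real MLOps framework.

```python
from statistics import mean
from typing import Callable, List, Sequence

# A "model" here is just a function from a feature value to a prediction.
Model = Callable[[float], float]


def training_pipeline(targets: Sequence[float]) -> Model:
    """Hypothetical pipeline: 'trains' a model that always predicts the mean."""
    baseline = mean(targets)
    return lambda _feature: baseline


# Deploying only the binary: the model is frozen at hand-over time.
frozen_model = training_pipeline([10.0, 12.0, 14.0])


def retrain(history: List[float], new_batch: Sequence[float]) -> Model:
    """Deploying the pipeline: the same code is re-run when new data arrives."""
    history.extend(new_batch)
    return training_pipeline(history)
```

In this toy setup the frozen model keeps predicting from the data it saw at hand-over, while calling `retrain` with a fresh batch produces an updated model without going back to the Experimentation stage. That re-runnability is the property that Continuous Training relies on.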

Monitoring

The Monitoring stage is where you track the performance of your models running in Production. This is also where feedback is fed into the previous stages, creating the feedback loop we are extremely interested in.

Involved: Software Engineers or ML Engineers.

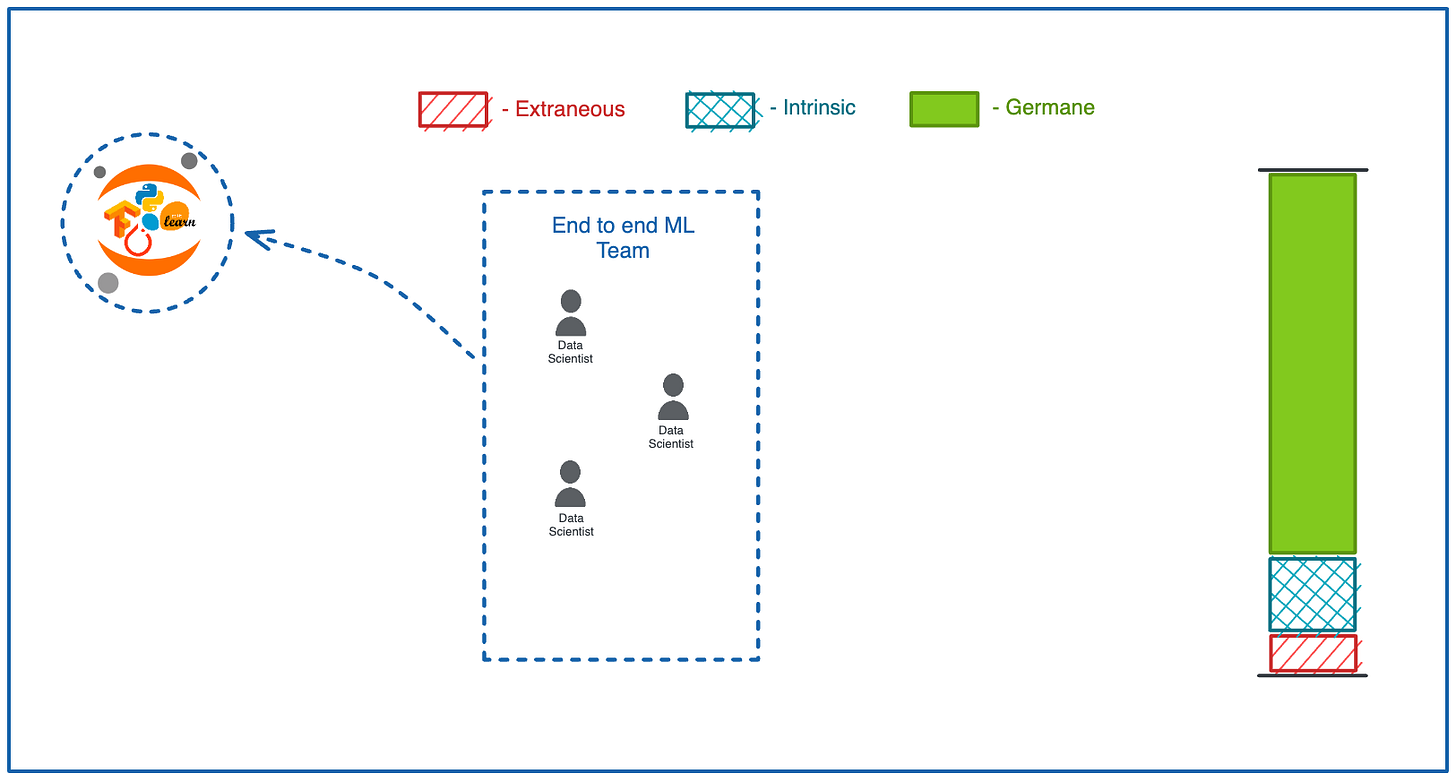

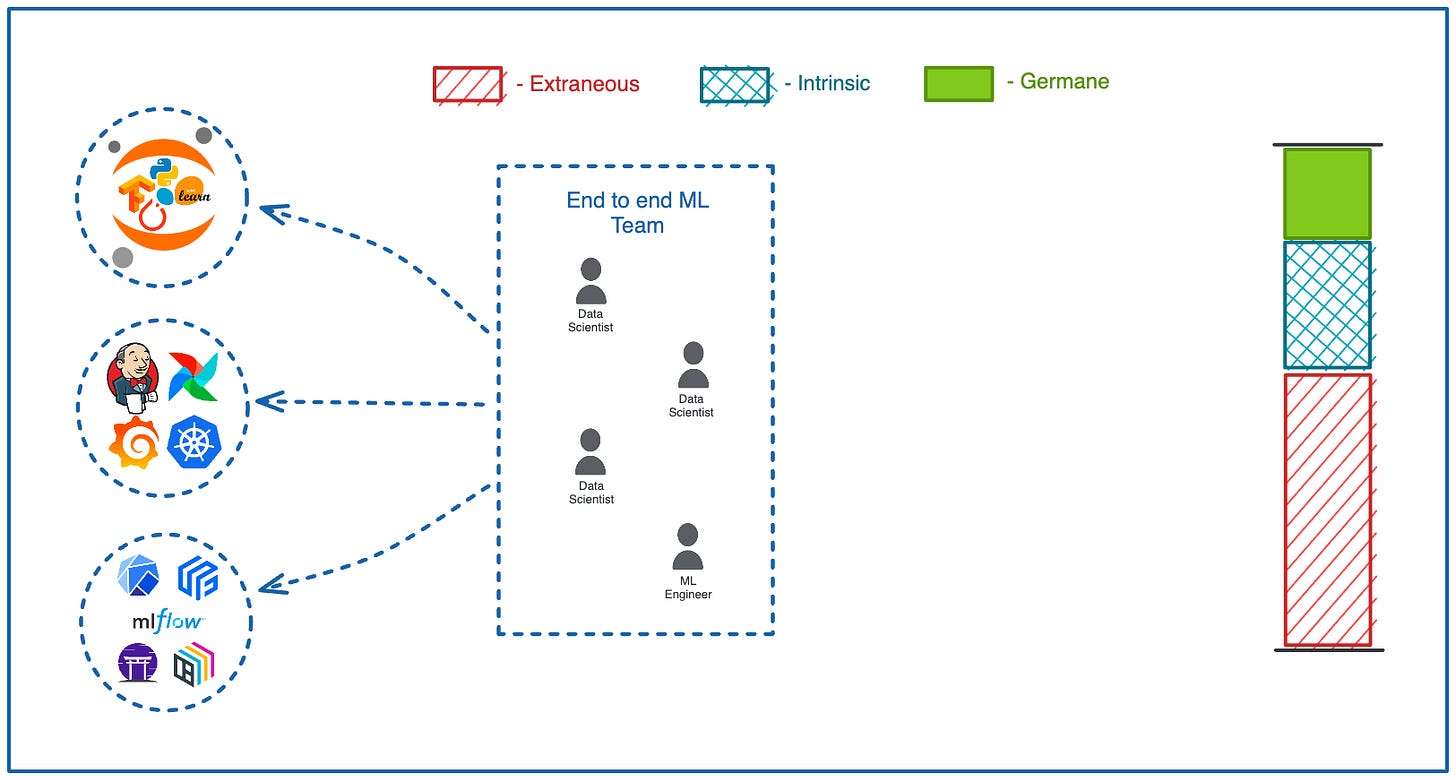

End-to-end Machine Learning Team

Modern Software Development practices advocate for end-to-end development flow contained in the boundaries of a single team. In this aspect, Machine Learning projects are no different. Both the handovers and feedback loops should be contained in a single team. This is why we should aim to have end-to-end ML Teams as a unit of ML product delivery.

From my experience, we can only achieve this by having ML Engineers, and not Software Engineers, responsible for implementing the Deployment and Monitoring stages as part of the end-to-end ML Teams.

Having said this, there are some constraints we introduce on these kinds of teams that we will continue to analyse in the following sections.

Cognitive load

Before we can fully understand the constraints we are placing on ML Teams by expecting them to own the end-to-end flow of a Machine Learning Project, we need to understand what Cognitive Load is and how it impacts the productivity of a team delivering Software.

Cognitive Load is defined as the amount of your working memory capacity that is being used up by a given task. It can be compared to the RAM of your computer: the more it fills up, the less effective the system becomes. The same applies to the human brain - we all have limited Cognitive Capacity.

There are three types of Cognitive Load:

Extraneous: in Software and ML projects this is all about the environments in which you develop and deploy the product. Example: understanding the tooling that is needed to deploy the product. If you are also responsible for maintaining the tooling, it adds up.

Intrinsic: in ML projects this type of cognitive load relates to fundamental knowledge around solving the task. Example: understanding how to write ML Python code or available ML model architectures.

Germane: in ML projects this cognitive load encompasses what is needed to solve the actual business problem. Example: understanding which ML model architecture should be used for a given problem and how to integrate the chosen architecture with the data assets we have available.

Capacity available for Germane Cognitive load is what we should aim for. We can reduce Extraneous Cognitive load by automating tasks away, and we can reduce Intrinsic Cognitive load by raising the seniority of the team.

As a ML Team you can end up in one of three situations.

A: The sum of Extraneous and Intrinsic cognitive loads exceeds the capacity. This is the worst situation to end up in as it prevents any creative work that is actually important from happening. No product is ever delivered.

B: Both Extraneous and Intrinsic loads are high, but we have some space for Germane load. Such a situation is clearly not ideal but happens in the industry very often. This is where we see overstressed employees who struggle to deliver but somehow manage it with overtime.

C: Our goal should be to eliminate Extraneous cognitive load altogether. This is the ideal state we could achieve - Extraneous load is minimised and Data Scientists can focus on building and improving the product.
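The three situations above can be expressed as a small toy model. This is only a sketch under stated assumptions: cognitive load is not something you can measure in real units, so the numbers, the `TeamLoad` class and the `comfortable_share` threshold are all hypothetical and exist purely to illustrate the reasoning.

```python
from dataclasses import dataclass


@dataclass
class TeamLoad:
    """Cognitive load on a team, in arbitrary made-up units."""
    extraneous: float  # tooling and environment overhead
    intrinsic: float   # fundamental knowledge needed for the task
    capacity: float    # total cognitive capacity of the team


def germane_capacity(load: TeamLoad) -> float:
    """Capacity left over for solving the actual business problem."""
    return max(0.0, load.capacity - load.extraneous - load.intrinsic)


def classify(load: TeamLoad, comfortable_share: float = 0.4) -> str:
    """Map a team onto situations A, B or C.

    comfortable_share is a hypothetical threshold: the fraction of
    capacity that must stay free for the team to count as situation C.
    """
    free = germane_capacity(load)
    if free == 0.0:
        return "A"  # nothing left for creative work
    if free / load.capacity < comfortable_share:
        return "B"  # delivery is possible, but only under strain
    return "C"      # plenty of room to focus on the product
```

With a capacity of 10, a team carrying 6 units of Extraneous and 5 of Intrinsic load lands in A, a team carrying 4 and 4 lands in B, and cutting Extraneous load to 1 while keeping Intrinsic at 4 moves it to C.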

Evolution of Cognitive Load in ML Teams.

There is one pattern of how ML Teams tend to evolve that I have seen unfold many times in my career. I think it makes sense to look at it more closely and analyse how the combined Cognitive Load placed on the end-to-end ML Team evolves as the pattern nears its conclusion. This analysis might help you prevent inefficiencies in your organisation when building up the Machine Learning function.

Stage 1: It all starts when there are no ML practices yet. It is your first ever ML project. Companies tend to hire one or multiple Data Scientists to deliver the project. Only ideation and experimentation are happening at this stage.

Extraneous and Intrinsic Load placed on the ML team is relatively low as Data Scientists experiment in Jupyter Notebooks using technologies they know very well. Capacity for creativity (Germane Cognitive Load) and experimentation is high.

Stage 2: Once we have a PoC model, we need to start the Deployment stage. This is when we bring an ML Engineer into the team.

Intrinsic load does not shift too much because Data Scientists are allowed to continue experimenting and the ML Engineer takes over the Deployment part.

Extraneous Load increases only slightly because:

Deployment infrastructure that is already present in the company is used.

This infrastructure is not ML specific, so the end-to-end ML team does not own it.

The ML Engineer can utilise all the automation already provided for deployment of regular Software.

Example tooling used: Kubernetes, Jenkins, Airflow, Grafana.

Stage 3: Companies understand that infrastructure meant for regular Software deployment is not a good fit for effective ML Project delivery.

Reasons for this could vary: pipelining and orchestration frameworks do not allow for reproducibility, there is no way to track ML metadata, there is no Feature Store, etc.

This is when Machine Learning specific tooling like Kubeflow, MLflow, ZenML etc. is brought in.

The problem: the infrastructure is championed by the end-to-end ML team itself. The ML Engineer deploys and maintains it.

Consequence: Extraneous load placed on the team skyrockets. Development speed of the ML product slows down significantly.

Stage 4: An unfortunate next step that organisations might take is increasing the headcount of the end-to-end ML team. As it doubles in size, additional projects are also assigned to it.

By doing so, companies unknowingly shoot themselves in the foot. While Extraneous load remains the same, we increase the average Intrinsic load that is placed on members of the team. Why does this happen?

By ramping up the number of team members and placing more projects on the team we introduce unnecessary communication lines between the team members. This causes Data Scientists and ML Engineers to care about projects they would not be involved in if an efficient team split were in place.
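A quick way to see why doubling the team hurts is to count the possible pairwise communication lines. The sketch below assumes the usual simplification that every team member may need to talk to every other one, which gives n * (n - 1) / 2 lines for a team of n people. That formula is my assumption for illustration and is not taken from any measurement; the function name is hypothetical.

```python
def communication_lines(team_size: int) -> int:
    """Number of possible one-to-one communication lines in a team.

    Assumes everyone may need to talk to everyone else:
    n * (n - 1) / 2 pairs for a team of n people.
    """
    if team_size < 0:
        raise ValueError("team_size must be non-negative")
    return team_size * (team_size - 1) // 2


# Doubling a team of 4 to a team of 8.
before = communication_lines(4)
after = communication_lines(8)

# The same 8 people organised as two separate teams of 4.
split = 2 * communication_lines(4)
```

Under this assumption a team of 4 has 6 lines, a team of 8 has 28, and the same 8 people split into two teams of 4 have only 12 lines inside their teams. Headcount doubles, but the coordination overhead grows much faster.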

If at this point you are not sounding alarms - you might be in trouble. Let’s see how we can fix the situation.

ML Platform Teams.

The very first thing you should do is split the team into two or more units, depending on how many ML projects you have. This will help remove the unnecessary communication lines that were introduced in the previous stage (allowing space for Germane cognitive load) and normalise product delivery.

The tech stack can still be shared between the teams. This shared setup should not live for too long though, as the tooling will inevitably start diverging as the use cases between teams start to differ and communication lines weaken.

The next step that should be taken is the introduction of an ML Platform and an ML Platform Team consisting of MLOps Engineers. The team takes over the tooling that was being maintained by ML Engineers and starts making the interfaces more convenient for the end-to-end ML teams to use.

By doing so, we remove most of the Extraneous Cognitive load from ML Teams. ML Engineers in the teams are now responsible for using infrastructure provided by ML Platform Team to stitch up any automation required for ML Product delivery.

Final picture.

We now come to the final picture and conclusions.

ML Product development has to flow effectively inside a single end-to-end ML Team. Any handover should be eliminated and the feedback should also flow without any interruptions within the boundaries of the same team.

The main factor preventing this from happening is the Cognitive Load placed on such an end-to-end ML Team.

We need an ML Platform Team that supports end-to-end ML Teams when implementing MLOps practices. By doing so we remove excessive Extraneous Cognitive load from the ML Team.

Inside the end-to-end ML Team, Data Scientists are responsible for iterating on the ML Product while ML Engineers are responsible for stitching up the capabilities provided by the ML Platform and providing automation to the ML Product.

[Important]: There is no free lunch. Platform teams come with a huge cost and they could even hurt the effectiveness of end-to-end ML Teams if not set up correctly. We will look into how to build an effective ML Platform and Team in future newsletter episodes.


Loved the framing of team structure through the lens of cognitive load. Thanks for the in-depth treatment of this topic.

Hi Aurimas,

Thanks for this read. The Cognitive Load framing and the strategy of splitting the Data and ML Team are interesting. I want to ask: how can we differentiate between ML Engineer and MLOps Engineer tasks?

I believe it is possible that some tasks could be hard to assign correctly to the right role.

Thanks a lot,

Best regards,

Michael