What is AI Engineering?

And what you need to break into the role.

👋 I am Aurimas. I write the SwirlAI Newsletter with the goal of presenting complicated Data related concepts in a simple and easy-to-digest way. My mission is to help You UpSkill and keep You updated on the latest news in GenAI, MLOps, Data Engineering, Machine Learning and overall Data space.

Recently there has been a lot of buzz around AI Engineering - AGAIN :). I was a bit surprised, as I had assumed that in the 2 years since the LLM hype began the definition of the role would have settled by now. Also, while working in the ML infrastructure space, discussions around the AI Engineer ICP came up often and it seemed clear what kind of profile we were talking about. I guess I was living in a bubble!

In this article I will outline my thoughts on the role of the AI Engineer and how it has evolved over the past year. My goal is to make part of SwirlAI a one-stop shop for anyone who wants to break into the role or upskill as an AI Engineer. Let's go!

A short outline of the article:

Evolution of AI Systems in the age of LLMs.

How is AI Engineering different from Machine Learning or Software Engineering?

What skills would you need to break into the role?

What is the future of AI Engineering?

Evolution of AI Systems in the age of LLMs.

My take is simple (and it might be perceived as controversial by some). AI Systems did not change that much. What did change is that we now have LLMs, which allow us to solve some additional complex tasks - as steps in the AI Systems pipeline - that we previously could not. In general, an LLM is an extremely versatile tool in your day-to-day, but what are the new key capabilities when it comes to building AI systems?

Planning.

Content extraction.

Content generation.

Code generation.

That is pretty much it. Is it powerful? Hell yes! Especially when combined with regular software and machine learning.

An AI System remains, well, a system of multiple components: some of them can be LLMs, some of them code executions, some of them just a good old classification model. Having said that, it is worth exploring how these new types of applications, which are a synthesis of old and new, have evolved, as AI Engineers are closely tied to their development and deployment.

If it were up to me, I would rather use the term AI Systems Engineer than AI Engineer, as it would ground the term in what building a successful AI product is actually about. There are two main Engineering related roles involved in building out the end result, which is a productionised AI System:

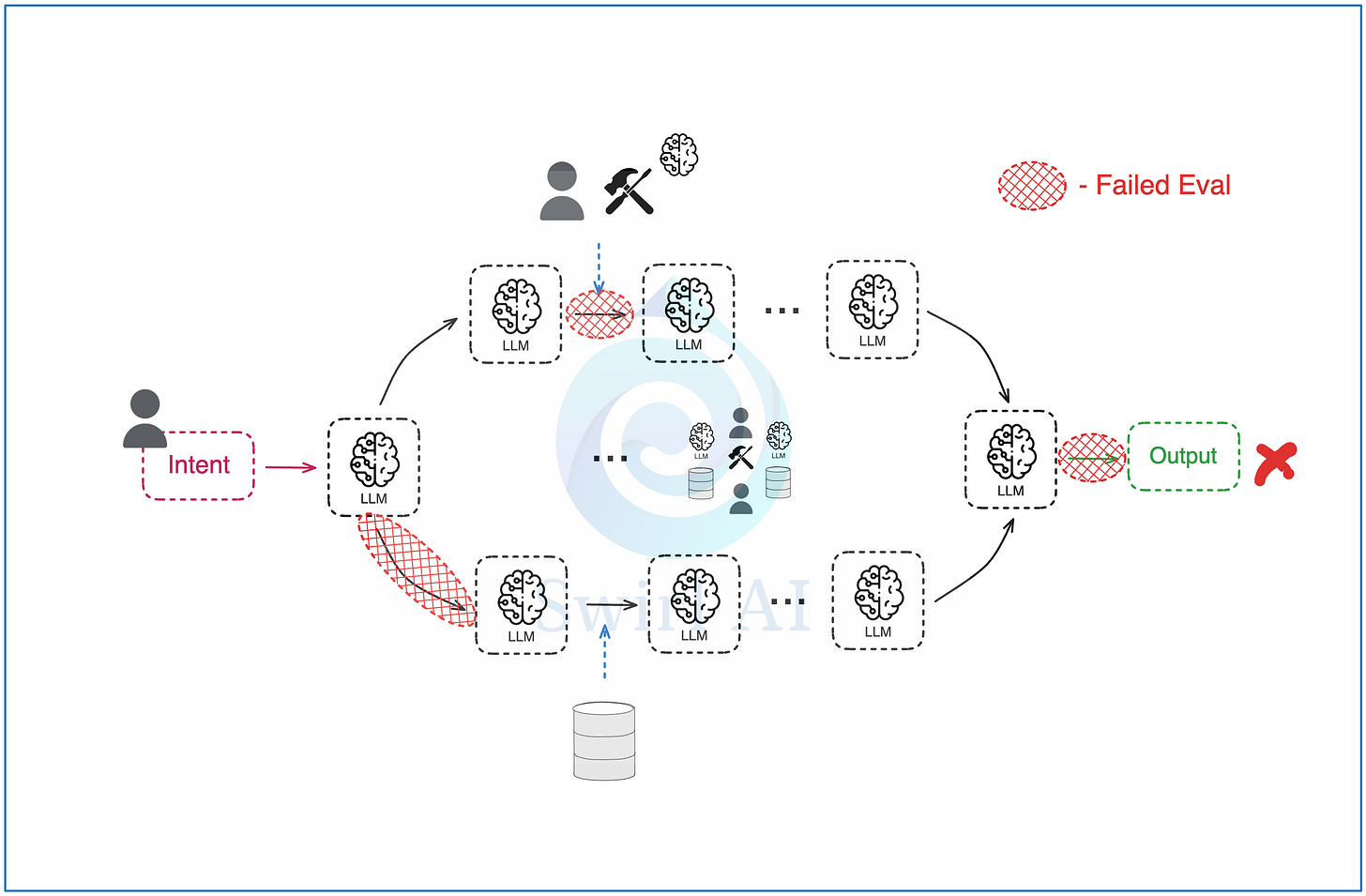

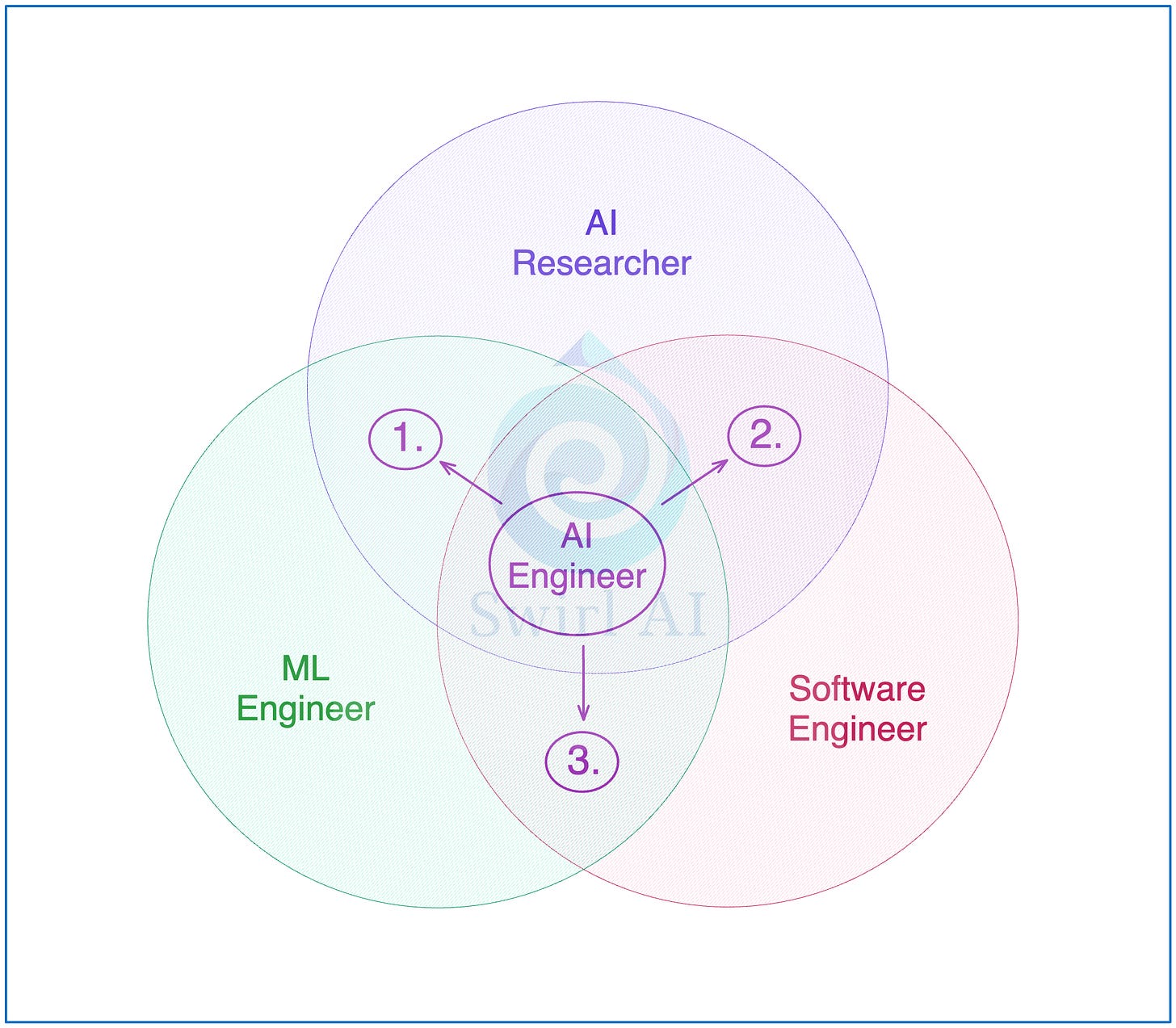

AI Researchers - they are building in the initial stage (marked as 1. in the picture above) of the LLM application lifecycle, either pre-training or post-training the LLMs that would later be used in AI systems. In recent months more and more emphasis is being put on the post-training phase, as it seems we are finally reaching the limits of the Scaling Law for LLMs. Out of the research in post-training we got products like OpenAI o1. Its usefulness is still limited, but with a reduction in latency it could significantly enhance the systems that are built on top of such “reasoning” models.

AI Engineers - they are building AI Systems (marked as 2. in the picture above), leveraging pre-trained LLMs to solve real business problems. In past years the practice has evolved from building simple applications based on a single prompt → answer architecture, to more complex Retrieval Augmented Generation systems, then to Agentic RAG, and eventually to Agents that are capable of more than just answering questions. In the next two years we should be able to reliably deploy more complex multi-agent systems and maybe (more likely by the end of 2026 or in 2027) fully autonomous agentic systems that would require little guard-railing from human operators.

There is some overlap between what AI Researchers and AI Engineers would work on in their day-to-day if we consider the common definition of roles (point 3.). The field is evolving fast. As mentioned before, more and more research is being poured into the post-training process. It is yet to be seen how much involvement AI Engineers will have in that, but it seems that it might move more to the side of AI Researchers. The goal of an AI Engineer is to take what is already available and stitch up an AI system that would solve the business problem. That does not mean that you don’t need to fine-tune an LLM from time to time.

Nowadays, a real production-ready AI System is rarely simple. Most projects fail because the accuracy of outputs cannot easily be raised to the required levels (or the system is deployed without knowing its accuracy, which always leads to eventual silent failure and disastrous outcomes). To improve the system, we bring some level of agency to it via various methods like:

Routing.

Reflection.

Query rewrites.

…
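
As an illustration of the first method, routing can be sketched in a few lines of Python. This is a minimal sketch, not a production design: `classify_intent`, the route labels and the handler functions are all hypothetical names, and simple keyword rules stand in for the LLM call that would normally pick the route.

```python
from typing import Callable, Dict


def classify_intent(query: str) -> str:
    """Return a route label for the query.

    Assumption: keyword rules stand in for an LLM-based classifier here.
    """
    q = query.lower()
    if any(word in q for word in ("invoice", "refund", "payment")):
        return "billing"
    if any(word in q for word in ("error", "crash", "bug")):
        return "technical"
    return "general"


# Hypothetical downstream pipelines; each could be its own prompt chain or model.
def handle_billing(query: str) -> str:
    return f"[billing pipeline] {query}"


def handle_technical(query: str) -> str:
    return f"[technical pipeline] {query}"


def handle_general(query: str) -> str:
    return f"[general pipeline] {query}"


ROUTES: Dict[str, Callable[[str], str]] = {
    "billing": handle_billing,
    "technical": handle_technical,
    "general": handle_general,
}


def route(query: str) -> str:
    """Send the query to the handler chosen by the classifier.

    Unknown labels fall back to the general handler, so a surprising
    classifier output does not crash the pipeline.
    """
    label = classify_intent(query)
    handler = ROUTES.get(label, handle_general)
    return handler(query)
```

The fallback in `route` matters once the classifier is a real LLM: its output is non-deterministic, so the router should tolerate labels it does not recognise.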

“The goal of an AI Engineer is to take what is already available and stitch up an AI system that would solve a real business problem.”

As the system becomes more complex, most of the nodes in the pipeline produce non-deterministic results. These need to be evaluated because a failure at any step will most likely derail the final output. Evals are HARD and not yet a universally solved problem, although they are being aggressively researched. To bring robustness to the system, we add components like:

Evaluations.

Observability.

Guardrails.

…
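
To make two of these components concrete, here is a minimal sketch of observability and a guardrail wrapped around a single pipeline step. Everything in it is an assumption made for illustration: `StepTrace`, `Tracer`, `run_step` and the toy `no_email_guardrail` are hypothetical names, and a real system would ship traces to an observability backend rather than keep them in a list.

```python
import time
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class StepTrace:
    """One record per executed step: what went in, what came out, how long it took."""
    step: str
    input_text: str
    output_text: str
    latency_s: float
    passed_guardrail: bool


@dataclass
class Tracer:
    """In-memory trace store; a stand-in for a real observability backend."""
    traces: List[StepTrace] = field(default_factory=list)


def no_email_guardrail(text: str) -> bool:
    """Toy guardrail: reject outputs that appear to contain an e-mail address."""
    return "@" not in text


def run_step(
    name: str,
    fn: Callable[[str], str],
    text: str,
    tracer: Tracer,
    guardrail: Callable[[str], bool],
    fallback: str = "[blocked by guardrail]",
) -> str:
    """Run one pipeline step, record a trace, and enforce the guardrail."""
    start = time.perf_counter()
    output = fn(text)
    latency = time.perf_counter() - start
    ok = guardrail(output)
    # Record the trace even when the guardrail fails, so failures stay visible.
    tracer.traces.append(StepTrace(name, text, output, latency, ok))
    return output if ok else fallback
```

Wrapping every node this way gives you a per-step record to inspect when the final output goes wrong, which is where evaluation usually starts.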

A simple example of agency in AI Systems.

A very simple example of an Agentic system is a basic Agentic RAG.

These are the steps that describe such a system at a high level:

Analysis of the user query: we pass the original user query to an LLM-based Agent for analysis. This is where:

The original query can be rewritten, sometimes multiple times to create either a single or multiple queries to be passed down the pipeline.

The agent decides if additional data sources are required to answer the query.

If additional data is required, the Retrieval step is triggered. In the Agentic RAG case, we could have a single agent or multiple agents responsible for figuring out which data sources should be tapped into. A few examples:

Real time user data. This is a pretty cool concept as we might have some real time information like current location available for the user.

Internal documents that a user might be interested in.

Data available on the web.

…

If there is no need for additional data, we try to compose the answer (or multiple answers) straight via an LLM.

The answer (or answers) get analysed, summarised and evaluated for correctness and relevance:

If the Agent decides that the answer is good enough, it gets returned to the user.

If the Agent decides that the answer needs improvement, we try to rewrite the user query and repeat the generation loop.

It is clear that there is a lot of non-determinism added to the regular RAG pipeline, even some cases where the pipeline could go into an infinite reasoning loop if not properly interrupted. We will go step by step into the process of evolving your RAG pipelines in the future Newsletter episodes.
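
The steps above can be sketched as a bounded loop. This is a rough sketch under stated assumptions, not a reference implementation: every function below (`rewrite_query`, `needs_retrieval`, `retrieve`, `generate`, `is_good_enough`) is a hypothetical stub standing in for an LLM or retrieval call, and `MAX_ITERATIONS` is an arbitrary cap chosen to show how the infinite loop is interrupted.

```python
from dataclasses import dataclass
from typing import List

MAX_ITERATIONS = 3  # hard stop so the rewrite/generate loop cannot run forever


@dataclass
class Result:
    answer: str
    iterations: int
    accepted: bool


# Hypothetical stubs: in a real system each of these would call an LLM or a data source.
def rewrite_query(query: str, attempt: int) -> str:
    """Keep the original query on the first pass, rewrite it on later passes."""
    return query if attempt == 0 else f"{query} (rewrite {attempt})"


def needs_retrieval(query: str) -> bool:
    """Toy decision rule: only queries about internal data need retrieval."""
    return "internal" in query.lower()


def retrieve(query: str) -> List[str]:
    return [f"document matching '{query}'"]


def generate(query: str, context: List[str]) -> str:
    source = "; ".join(context) if context else "model knowledge only"
    return f"Answer to '{query}' using {source}"


def is_good_enough(answer: str, attempt: int) -> bool:
    """Toy evaluator: accept from the second attempt onwards."""
    return attempt >= 1


def agentic_rag(user_query: str) -> Result:
    answer = ""
    for attempt in range(MAX_ITERATIONS):
        query = rewrite_query(user_query, attempt)                   # query analysis
        context = retrieve(query) if needs_retrieval(query) else []  # optional retrieval
        answer = generate(query, context)                            # answer composition
        if is_good_enough(answer, attempt):                          # evaluation
            return Result(answer, attempt + 1, True)
    # Budget exhausted: return the last answer, flagged as not accepted.
    return Result(answer, MAX_ITERATIONS, False)
```

Returning the `accepted` flag instead of raising lets the caller decide what to do with a low-confidence answer, for example fall back to a human or a canned response.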

Agentic RAG can already be considered an Agent and it is one of the most common ones in the industry. This is what AI Engineers are dealing with!

AI Engineering vs. ML and Software Engineering.

Let's get this straight: it might seem easy to build an LLM application nowadays. Just connect to a third party API, craft some prompts, connect the app to a chat interface or your e-mail stream and you have yourself an agentic system. And it is true - it is easy to do exactly that, but the system is only good until it starts breaking apart.

My observation while talking with companies building with LLMs is that most of them are in the early stages, where Software Engineers have already built initial applications (usually chat bots, chat bot agents or email agents). Unfortunately, Software Engineers are not used to dealing with non-deterministic systems; that is what ML Engineers do. These systems usually lack proper observability or even some sort of basic automated evaluation - organisations are running blind and are relying on human evaluations for products at scale.

Just a year and a half ago I would have said that the existence of this new role called AI Engineer was not justified. Back then people were openly discussing how everyone could now build LLM based apps and ship them to production in days. My opinion changed after building several such apps. It is easy to start, but without a proper foundation the projects will fail - we need AI Engineers.

One could argue that the transition to AI Engineering would be most natural from either ML Engineer, Software Engineer or AI Researcher.

AI Researchers - they are masters of prototyping, coming up with novel ideas and testing their hypotheses. They analyse the output data and come up with novel strategies for continuously improving the models. They have a deep understanding of statistics and ML fundamentals. Nowadays, they are very likely able to run LLM training on distributed systems themselves.

What they might initially lack in skills is the ability to deploy real world production applications and implement MLOps best practices in the world of LLMs.

ML Engineers - capable of building and deploying regular Machine Learning models as AI/ML systems with all of the bells and whistles of MLOps. This includes implementing a feedback flywheel and the ability to observe and continuously improve the system. Also, ML Engineers are usually involved in Data Engineering to some extent, often utilising ML specific data stores like Feature Stores or Vector DBs.

What they might initially lack in skills is the ability to perform deep research, build production ready high throughput systems, and implement and operate regular software best practices.

Software Engineers - they are great! Capable of crafting complex high throughput, low latency systems that are deterministic. Translating business requirements into complex software flows. Masters of DevOps and software engineering best practices, capable of high velocity development and shipping to production in a safe way.

What they might initially lack in skills is the ability to reason about non-deterministic systems and the knowledge of how to observe and evaluate them. Also, it is not in their nature to continuously learn non-software topics that could completely shift in a day, requiring re-architecture of the entire system.

It is naive to expect that there would be many professionals out there who are great at all 3 disciplines - that's a unicorn. That is why I think we will usually see AI Engineers possessing a blend of 2 disciplines and falling into the area of either 1., 2. or 3. as depicted in the diagram above. Will we have separate names for these roles? Who knows. The first thing that comes to my mind is that maybe the person in 3. could be called AI Systems Engineer or AI Architect. Let's see how it evolves!

Now, there is a trend of new companies, started with far fewer engineers, outcompeting the incumbents. I believe that full stack AI Engineers will be the ones best positioned to disrupt the market.

What skills would you need to succeed in AI Engineering?

Research - white papers need to become your best friend. There is so much research happening in the field of Agentic applications that it is hard to keep up. As an example, just recently, there has been a paper released with research around how Prompt Formatting can influence the performance of your LLM applications. The truth is that with internal data and compute resources at your disposal, you - the AI Engineer - are best positioned to do your own research on what works and what does not, and you should do it for the sake of your employer.

Prompt Engineering - while it might sound simple, the techniques for prompt engineering and formatting are vast. When it comes to agentic systems, you are also dealing with cross agent prompt dependencies, shared state and memory that is also implemented via prompting. On top of this, everything needs to be evaluated so you will need custom evals for any prompt you are crafting coupled with datasets that you can test on.

Software Development - no questions here, the systems you are deploying need to be solid. You need to know and follow software engineering and DevOps best practices.

Infrastructure - one aspect of this is that you need to be able to deploy your own work; you could say it is part of Software Development. You also need to understand your data and new types of storage systems like Vector DBs. In general, these are not new, but they are rarely used by non-ML Engineers.

Data Engineering - you would be surprised by how much time you actually spend understanding, cleaning and processing the data that is then used in your AI Systems. Not everything is about prompting; the hardest part is usually integrating the data sources into your AI applications.

MLOps adapted for AI Systems (AgentOps) - we have introduced a lot of good practices into building AI systems in the past ~5 years via the MLOps movement. Most of them should be transferred when building with LLMs.

Evaluation.

Observability. I talk about some of the challenges in observing Agentic systems in one of my articles:

Prompt tracking and versioning.

Feedback and the continuous system improvement flywheel.
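
Two of the practices above, prompt versioning and evaluation against a test dataset, fit into a small sketch. It is a simplified illustration under assumptions: `PromptVersion`, `evaluate` and `fake_model` are hypothetical names, the version id is just a content hash of the template, and exact-match accuracy stands in for whatever custom eval your use case needs.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class PromptVersion:
    name: str
    template: str  # expected to contain a {question} placeholder

    @property
    def version(self) -> str:
        """Content hash, so any change to the template yields a new version id."""
        return hashlib.sha256(self.template.encode("utf-8")).hexdigest()[:8]


def evaluate(
    prompt: PromptVersion,
    model: Callable[[str], str],
    dataset: List[Tuple[str, str]],
) -> float:
    """Exact-match accuracy of `model` over (question, expected answer) pairs."""
    if not dataset:
        return 0.0
    correct = 0
    for question, expected in dataset:
        output = model(prompt.template.format(question=question))
        if output.strip().lower() == expected.strip().lower():
            correct += 1
    return correct / len(dataset)


def fake_model(rendered_prompt: str) -> str:
    """Hypothetical stub in place of a real LLM call."""
    return "4" if "2 + 2" in rendered_prompt else "unknown"
```

Storing the score next to `prompt.version` for every run gives you a history of which prompt change moved accuracy in which direction, which is the starting point for the improvement flywheel.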

“Not everything is about prompting, the hardest part is usually integrating the internal data sources into your AI applications.”

What would your day-to-day look like as an AI Engineer?

Surprisingly, there is a lot of non-engineering work in your day-to-day as an AI engineer. Organisations are still on a hype train around LLMs, and you will be pushed to implement them everywhere, even where there is no good fit. One of your key responsibilities will be to coach the organisation and be at the forefront of deciding where and if LLMs are even needed to solve a business problem.

You will spend a lot of time researching, reading papers and blogs, and following what the top players are doing. Remember when Anthropic introduced contextual embeddings? It seems painfully obvious now that it is a good idea, but it took some time until someone wrote about it and made it feasible cost-wise via prompt caching.

You will spend a lot of time figuring out how to build your test datasets so that you can evaluate your systems. Collaboration with your stakeholders will be key, e.g. you will need tight integrations with your front-end systems for feedback implementation.

Only then does the engineering part start! And all of the above is worth it :)

“One of your key responsibilities will be to coach the organisation and be at the forefront of deciding where and if LLMs are even needed to solve a business problem.”

What is the future of AI Engineering?

My prediction is that every company will have a set of agentic flows automating their processes within the upcoming few years. Hence, all companies will need the skills of AI Engineers. On top of it, AI applications will be the ones bringing the most value to the business and will be required to keep up with competition as everyone will be doubling down on this technology.

AI Engineer is positioned to be the hottest role in the upcoming years. The salaries are high and the demand will keep increasing due to the shortage of talent in the field. 2025 will be the year of agents, 2026 - very likely the year of multi agent systems and autonomous agents.

On top of this, AI Engineers are, and will remain, best positioned to take on the task of building new companies with minimal resources. The more full-stack an AI Engineer is, the more power she will have at her fingertips.

If you want to break into the role or level up as an AI engineer, be sure to subscribe to the Newsletter. Let's go build!