Everything you need to know about MCP.

And why it is important for how we shape and build Agentic Systems.

👋 I am Aurimas. I write the SwirlAI Newsletter with the goal of presenting complicated Data related concepts in a simple and easy-to-digest way. My mission is to help You UpSkill and keep You updated on the latest news in GenAI, MLOps, Data Engineering, Machine Learning and overall Data space.

MCP (Model Context Protocol) by Anthropic is all over the news this month. I have been following the project since its public release, announced on the 25th of November, 2024. In this article I will share my thoughts on why I believe you should start looking into MCP and why it is important for the future of AI Agents and Agentic systems. Here is the outline of what you will find in the article:

Refresher on AI Agents and Agentic Systems.

What is MCP?

Splitting control responsibilities through MCP.

Evolving AI Agent architecture with MCP.

The future roadmap of MCP.

Refresher on AI Agents and Agentic Systems.

In its simplest high-level definition, an AI agent is an application that uses an LLM at its core as the reasoning engine to decide on the steps it needs to take to solve for the user’s intent. It is usually explained via an image similar to the picture below and is composed of multiple building blocks:

Planning - the capability to plan a sequence of actions that the application needs to perform in order to solve for the provided intent. There are many strategies for this. I have written an article about one of them - Reflection, where we built it from scratch without using any LLM Orchestration frameworks.

Memory - short-term and long-term memory containing any information that the agent might need to reason about the actions it needs to take. This information is usually passed to the LLM via a system prompt as part of the core. You can read more about different types of memories in one of my previous articles.

Tools - any function that the application can call to enhance its reasoning capabilities. One should not be fooled by the simplicity of this definition, as a tool can be literally anything:

Simple functions defined in code.

VectorDBs and other data stores containing context.

Regular Machine Learning model APIs.

Other Agents!

…

I have also written an article where I implement the tool use pattern from scratch.

Not all Agentic Systems are given full agency over execution in the environment. Anthropic has also described “Augmented LLMs”, where applications integrating LLMs as reasoning engines are only given control over tools and memory but not planning. The topology of how the interactions happen is defined in code rather than planned out by the LLM.
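To make the distinction concrete, here is a minimal sketch of an augmented LLM in plain Python. Everything in it is hypothetical and only for illustration: the `Tool` structure, the two toy tools and `fake_llm_pick_tool`, which stands in for a real LLM call. The point it tries to show is that the sequence of steps lives in code, while the "model" only chooses which tool to use.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Tool:
    """A tool is just a described, callable function (hypothetical structure)."""
    name: str
    description: str
    func: Callable[[str], str]


def word_count(text: str) -> str:
    return str(len(text.split()))


def shout(text: str) -> str:
    return text.upper()


TOOLS: Dict[str, Tool] = {
    t.name: t
    for t in (
        Tool("word_count", "Count the words in a text.", word_count),
        Tool("shout", "Convert a text to upper case.", shout),
    )
}


def fake_llm_pick_tool(user_query: str, tools: Dict[str, Tool]) -> str:
    """Stand-in for an LLM call. A real system would send the tool
    descriptions to the model and parse the tool name it returns."""
    return "word_count" if "how many" in user_query.lower() else "shout"


def augmented_llm(user_query: str, payload: str) -> str:
    # The topology is fixed in code: pick one tool, run it, return the result.
    # The "LLM" controls which tool is used, not the order of the steps.
    tool_name = fake_llm_pick_tool(user_query, TOOLS)
    return TOOLS[tool_name].func(payload)
```

A full agent would instead let the model decide how many steps to take and in which order, which is exactly the planning capability that an augmented LLM is not given.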

What is MCP?

MCP (Model Context Protocol) as defined by Anthropic is:

An open protocol that standardizes how applications provide context to LLMs.

To be more precise, it attempts to standardise how LLM-based applications integrate with other environments.

In Agentic systems, whether AI Agents or chains of augmented LLMs, the context can be provided in multiple ways:

External data - this is part of long term memory.

Tools - the capability of the system to interact with the environment.

Dynamic Prompts - that can be injected as part of the system prompt.

…

Below is the high-level architecture of MCP.

MCP Host - Programs using LLMs at the core that want to access data through MCP.

MCP Client - Clients that maintain 1:1 connections with servers.

MCP Server - Lightweight programs that each expose specific capabilities through the standardized Model Context Protocol.

Local Data Sources - Your computer’s files, databases, and services that MCP servers can securely access.

Remote Data Sources - External systems available over the internet (e.g., through APIs) that MCP servers can connect to.
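The relationship between these components can be sketched with a few toy classes. This is not the MCP SDK; `ToyHost`, `ToyClient` and `ToyServer` are hypothetical names used only to illustrate that a host owns one client per server and that each client talks to exactly one server.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ToyServer:
    """Stand-in for an MCP server exposing a few named capabilities."""
    name: str
    capabilities: List[str]


@dataclass
class ToyClient:
    """Holds a connection to exactly one server (the 1:1 relationship)."""
    server: ToyServer

    def list_capabilities(self) -> List[str]:
        return list(self.server.capabilities)


@dataclass
class ToyHost:
    """The LLM application. It owns one client per connected server."""
    clients: Dict[str, ToyClient] = field(default_factory=dict)

    def connect(self, server: ToyServer) -> ToyClient:
        client = ToyClient(server)  # a new client for every server
        self.clients[server.name] = client
        return client

    def all_capabilities(self) -> Dict[str, List[str]]:
        return {name: c.list_capabilities() for name, c in self.clients.items()}


host = ToyHost()
host.connect(ToyServer("files", ["read_file"]))       # fronts a local data source
host.connect(ToyServer("weather", ["get_forecast"]))  # fronts a remote data source
print(host.all_capabilities())
```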

Why the need to standardise?

Current development flow of Agentic applications is chaotic:

There are many Agent frameworks with slight differences. While it is encouraging to see the ecosystem flourish, these slight differences rarely add enough value, yet they can significantly change the way you write code.

Integrations with external data sources are usually implemented ad hoc and use different protocols even within a single organisation. The same is clearly true across different companies.

Tools are defined in code repositories in slightly different ways. How you attach tools to augmented LLMs is different as well.

Ultimately, the goal is to increase how fast we can innovate with Agentic applications, how well we can secure them and how easy it is to bring relevant data into the context.

Splitting control responsibilities through MCP.

MCP Servers expose three main elements that are purposely built in a way that helps implement specific control segregation.

Prompts are designed to be User-Controlled.

The programmer of the server can expose specific prompts (suited for interaction with the data exposed by the server) that can be injected into the application using LLMs and exposed to the user of that application.

Resources are designed to be Application-Controlled.

Resources are any kind of data (text or binary) that can be used by the application built to utilise LLMs. The programmer of the application (usually an AI Engineer) is responsible for codifying how this information should be used by the application. Usually, there is no automation in that, and the LLM does not participate in this choice.

Tools are designed to be Model-Controlled.

If we give our application agency over how it interacts with the environment, we use tools to do that. The MCP Server exposes an endpoint that lists all of the available tools with their descriptions and required arguments. The application can pass this list to the LLM so that it can decide which tools are needed for the task at hand and how they should be invoked.
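The tool listing and invocation flow can be sketched as a toy in-process request handler. MCP messages are JSON-RPC 2.0, and the method names `tools/list` and `tools/call` as well as fields such as `inputSchema` and `content` follow my reading of the specification, so verify them against the spec version you target. The `search_docs` tool and the `handle_request` function are hypothetical, and a real server would run over a transport rather than a direct function call.

```python
from typing import Any, Dict

# One hypothetical tool, described by name, description and a JSON Schema.
TOOLS = [
    {
        "name": "search_docs",
        "description": "Search internal documents for a query string.",
        "inputSchema": {
            "type": "object",
            "properties": {"query": {"type": "string"}},
            "required": ["query"],
        },
    }
]


def _error(request_id: Any, code: int, message: str) -> Dict[str, Any]:
    return {"jsonrpc": "2.0", "id": request_id, "error": {"code": code, "message": message}}


def handle_request(request: Dict[str, Any]) -> Dict[str, Any]:
    """Toy dispatcher for two tool-related methods."""
    method = request["method"]
    if method == "tools/list":
        # The application forwards this list to the LLM.
        result: Dict[str, Any] = {"tools": TOOLS}
    elif method == "tools/call":
        params = request["params"]
        if params["name"] != "search_docs":
            return _error(request["id"], -32602, "Unknown tool")
        query = params["arguments"]["query"]
        result = {
            "content": [{"type": "text", "text": f"Results for '{query}'"}],
            "isError": False,
        }
    else:
        return _error(request["id"], -32601, "Method not found")
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# The model picks a tool from the listing, the application issues the call.
listing = handle_request({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle_request(
    {
        "jsonrpc": "2.0",
        "id": 2,
        "method": "tools/call",
        "params": {"name": "search_docs", "arguments": {"query": "mcp"}},
    }
)
```

Prompts and Resources are exposed through their own listing methods in the same style, but who triggers them differs: the user for Prompts, the application code for Resources, the model for Tools.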

Evolving AI Agent architecture with MCP.

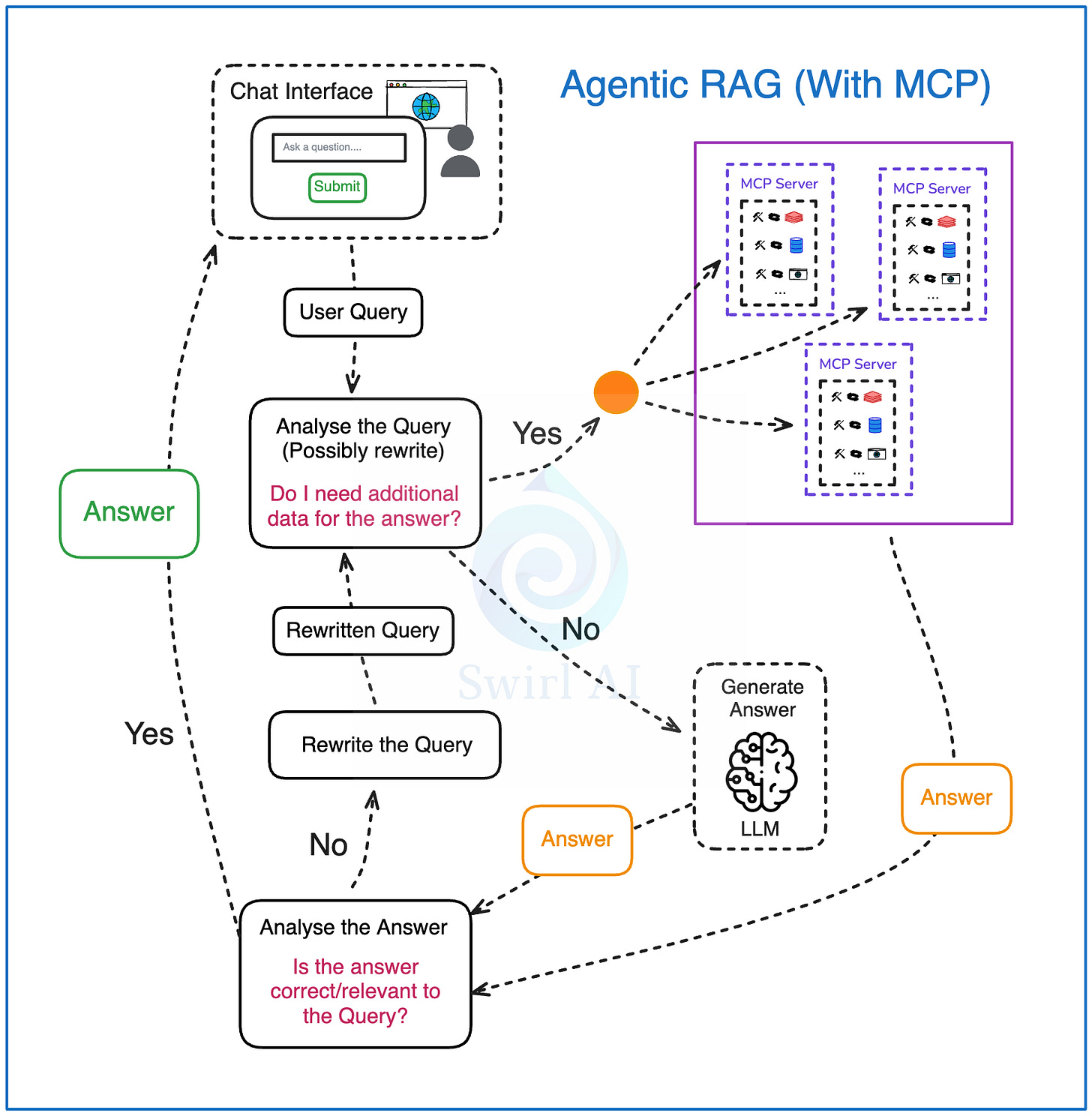

To explain how the architecture of an Agentic application could evolve with MCP, let’s take the example of a very simple Agentic RAG.

Here are the steps the System could involve:

Analysis of the user query: we pass the original user query to an LLM-based Agent for analysis. This is where:

The original query can be rewritten, sometimes multiple times to create either a single or multiple queries to be passed down the pipeline.

The agent decides if additional data sources are required to answer the query.

If additional data is required, the Retrieval step is triggered. In the Agentic RAG case, we could have a single agent or multiple agents responsible for figuring out which data sources should be tapped into. A few examples:

Real time user data. This is a pretty cool concept as we might have some real time information like current location available for the user.

Internal documents that a user might be interested in.

Data available on the web.

…

If there is no need for additional data, we try to compose the answer (or multiple answers or a set of actions) straight via an LLM.

The answer gets analyzed, summarized and evaluated for correctness and relevance:

If the Agent decides that the answer is good enough, it gets returned to the user.

If the Agent decides that the answer needs improvement, we try to rewrite the user query and repeat the generation loop.
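The steps above can be sketched as a small control loop. This is a simplified illustration, not a reference implementation: every step is passed in as a plain function so that LLM calls can be stubbed out, and the function names and the `max_rounds` safety limit are my own assumptions.

```python
from typing import Callable, List


def agentic_rag(
    query: str,
    needs_retrieval: Callable[[str], bool],
    retrieve: Callable[[str], List[str]],
    generate: Callable[[str, List[str]], str],
    is_good_enough: Callable[[str, str], bool],
    rewrite: Callable[[str, str], str],
    max_rounds: int = 3,
) -> str:
    """Analyse, optionally retrieve, generate, evaluate, and retry if needed."""
    current_query = query
    answer = ""
    for _ in range(max_rounds):
        # Query analysis: decide whether additional data sources are required.
        context = retrieve(current_query) if needs_retrieval(current_query) else []
        # Generation: compose an answer from the query and any retrieved context.
        answer = generate(current_query, context)
        # Evaluation is always done against the original user query.
        if is_good_enough(query, answer):
            return answer
        # Otherwise rewrite the query and repeat the generation loop.
        current_query = rewrite(current_query, answer)
    return answer  # best effort once the round limit is reached
```

In a real system each of the callables would wrap an LLM call or a data source, and the round limit guards against an evaluation step that never accepts an answer.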

How does the architecture change if we introduce MCP into the picture?

We can place MCP servers in front of all data sources relevant to the retrieval procedure. The MCP servers handle the retrieval logic through Tools, since the LLM will be “choosing” which data sources are relevant for the system. Here are some benefits of this approach:

We decouple retrieval logic from the topology of the Agentic system.

We can then evolve the retrieval component separately:

Introduce additional tools.

Introduce additional data sources.

Version, evolve and roll back existing tools and data sources.

We can manage security and access to the data via the MCP server.

A separate team can be independently working on the data it is responsible for.
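A minimal sketch of this decoupling, assuming a toy in-process server rather than a real MCP server: `ToyRetrievalServer`, `agent_retrieve` and `keyword_chooser` (a stand-in for the LLM's tool choice) are all hypothetical. The agent code only lists and calls tools, so a new data source can be added on the server side without touching it.

```python
from typing import Any, Callable, Dict, List


class ToyRetrievalServer:
    """Hypothetical stand-in for an MCP server that owns retrieval logic."""

    def __init__(self) -> None:
        self._tools: Dict[str, Dict[str, Any]] = {}

    def tool(self, description: str) -> Callable:
        """Decorator that registers a function as a tool under its own name."""
        def register(func: Callable[[str], List[str]]) -> Callable:
            self._tools[func.__name__] = {"description": description, "func": func}
            return func
        return register

    def list_tools(self) -> List[Dict[str, str]]:
        return [{"name": n, "description": t["description"]} for n, t in self._tools.items()]

    def call_tool(self, name: str, query: str) -> List[str]:
        return self._tools[name]["func"](query)


def keyword_chooser(query: str, tools: List[Dict[str, str]]) -> str:
    """Stand-in for the LLM choosing a tool from the listing."""
    names = [t["name"] for t in tools]
    if "web" in query and "search_web" in names:
        return "search_web"
    return names[0]


def agent_retrieve(server: ToyRetrievalServer, query: str) -> List[str]:
    # Agent side: it never changes when the server gains new tools.
    name = keyword_chooser(query, server.list_tools())
    return server.call_tool(name, query)


server = ToyRetrievalServer()


@server.tool("Search internal documents.")
def search_docs(query: str) -> List[str]:
    return [f"doc hit for {query}"]


before = agent_retrieve(server, "web news")  # only search_docs exists so far


@server.tool("Search the web.")
def search_web(query: str) -> List[str]:
    return [f"web hit for {query}"]


after = agent_retrieve(server, "web news")  # same agent code, new data source
```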

Evolving the architecture in larger enterprises.

As enterprises grow in size, different teams start owning specific data assets. E.g.:

CRM Data.

Financial Data.

Real time Web clickstream data.

…

It is hard to effectively manage these disparate data sources and MCP brings a lot of value to the game.

Each data domain can manage its own MCP Servers.

All the MCP servers will use the same protocol.

Because of the above, the integration efforts for LLM based applications will be significantly reduced.

AI Engineers can continue to focus on the topology of the Agent.

[IMPORTANT]: This is one of the biggest advantages of using MCP in my opinion. It allows the decoupling of systems in large projects. Different teams can work on their own domains while not disturbing development of the main Agentic topology.
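As an illustration of domain-owned servers behind one shared interface, here is a small sketch in which each team's server is reduced to a dictionary of tool functions. The `domain.tool` naming convention, the server contents and the helper functions are assumptions made for this example and are not part of MCP itself.

```python
from typing import Callable, Dict, List

ToyServer = Dict[str, Callable[[str], str]]

# Each domain team owns and evolves its own (toy) server independently.
crm_server: ToyServer = {"lookup_customer": lambda arg: f"customer:{arg}"}
finance_server: ToyServer = {"get_invoice": lambda arg: f"invoice:{arg}"}

SERVERS: Dict[str, ToyServer] = {"crm": crm_server, "finance": finance_server}


def list_all_tools(servers: Dict[str, ToyServer]) -> List[str]:
    """Aggregate tools from every domain behind one uniform listing."""
    return sorted(f"{domain}.{tool}" for domain, tools in servers.items() for tool in tools)


def call_tool(servers: Dict[str, ToyServer], qualified_name: str, arg: str) -> str:
    """Route a call to the owning domain based on the name prefix."""
    domain, tool = qualified_name.split(".", 1)
    return servers[domain][tool](arg)


print(list_all_tools(SERVERS))
print(call_tool(SERVERS, "finance.get_invoice", "42"))
```

The Agent topology only depends on `list_all_tools` and `call_tool`, so a team adding a tool to its own server does not require any change on the application side.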

The future roadmap of MCP.

The public roadmap for the next 6 months strongly suggests a strengthening of the Cloud Native aspect of the project, with improvements in:

Authentication and authorisation.

Service Discovery.

It also points to expanded support for the future of Agentic Systems, with a focus on:

Hierarchical Agent Systems

Interactive Workflows to improve human in the loop interactions.

Streaming of Results.

That’s it for today, let’s sum up.

While MCP is still relatively rough around the edges, the roadmap does look promising. The fact that the project is backed by Anthropic also suggests a bright future for it when it comes to adoption.

You should keep your eye on the project and potentially start adopting it!

Expect more hands-on content utilising MCP in future episodes.
